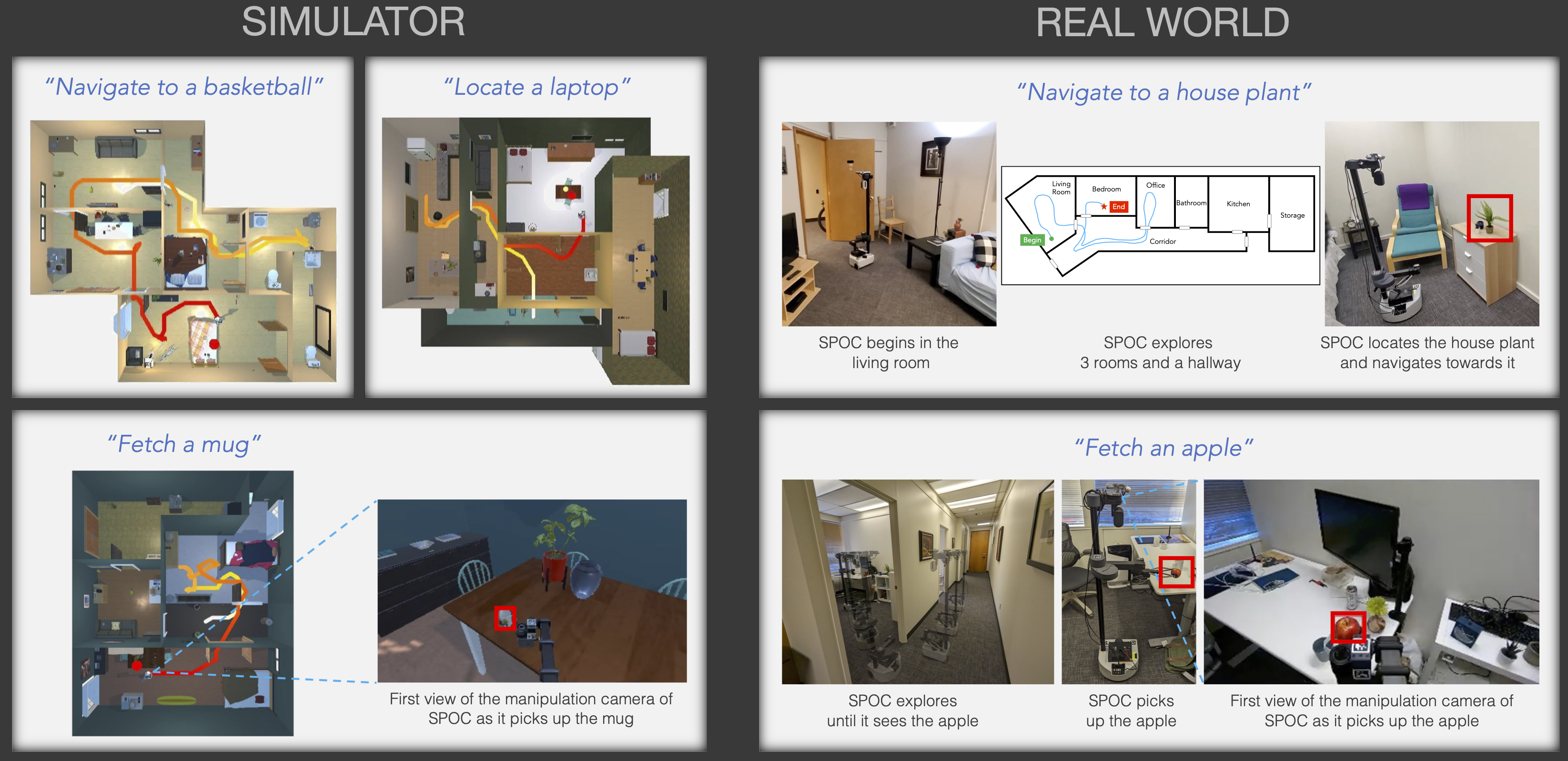

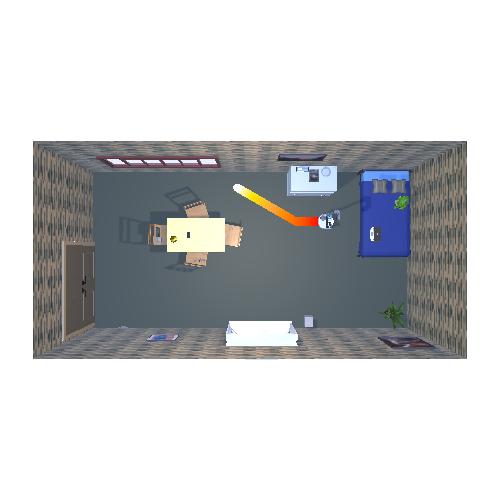

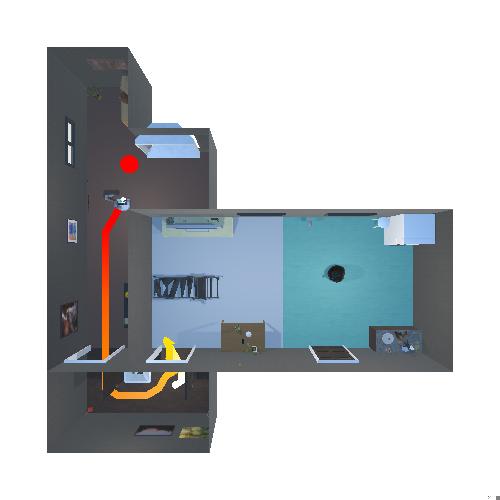

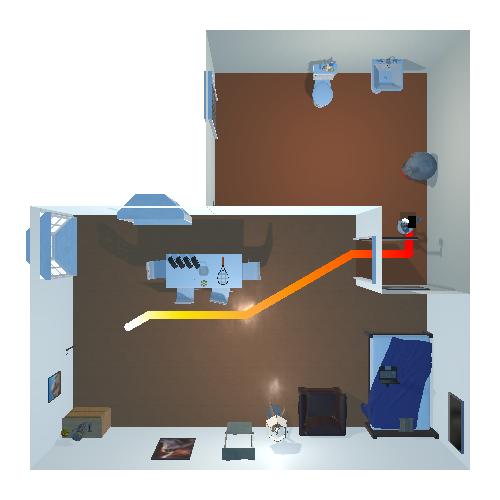

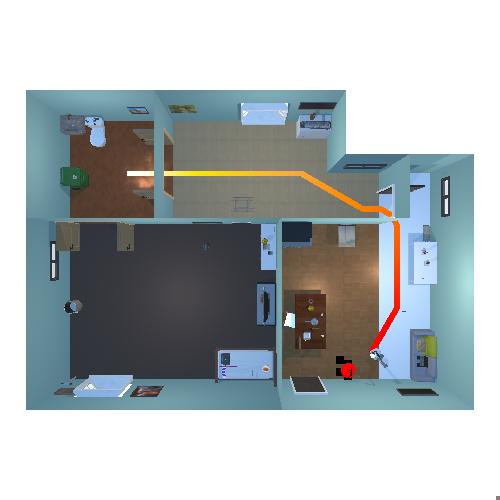

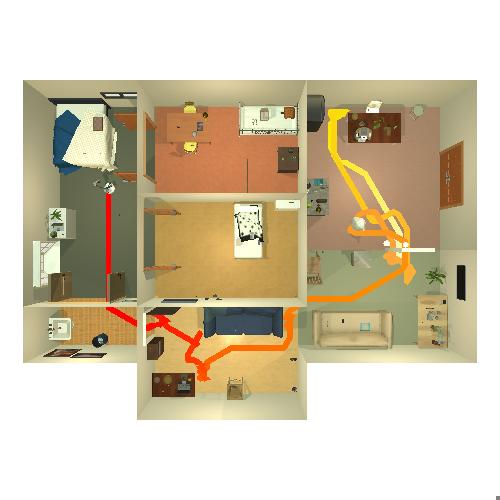

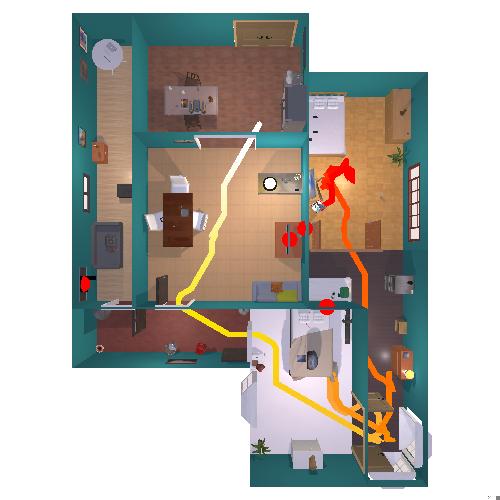

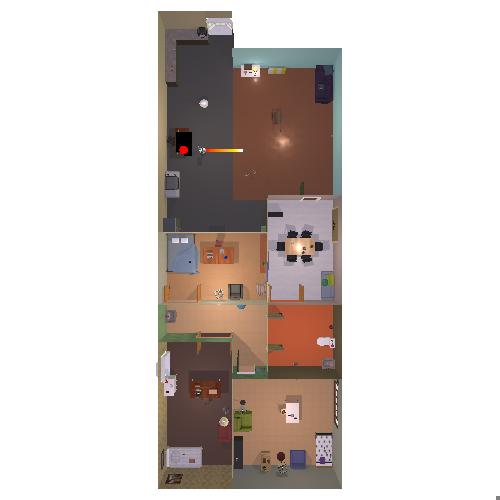

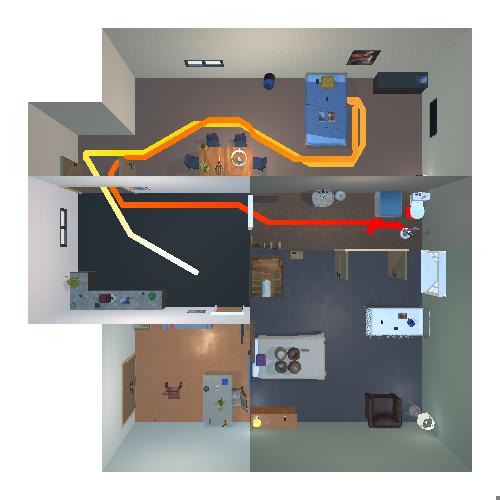

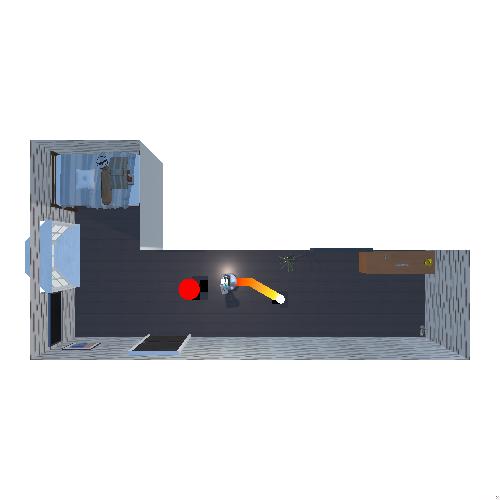

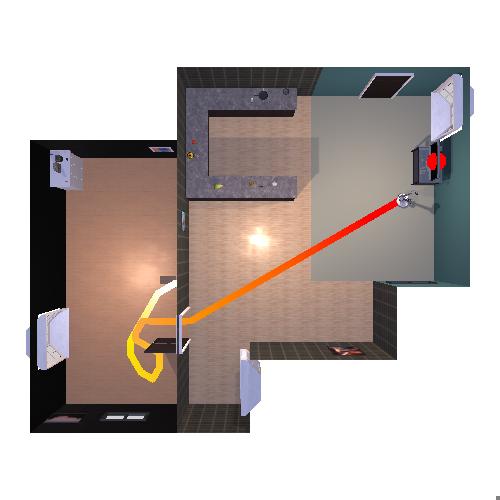

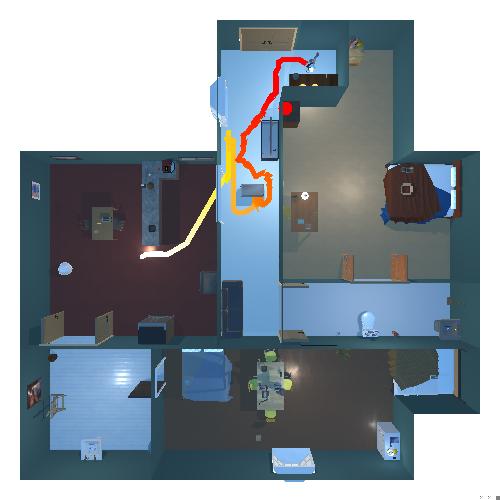

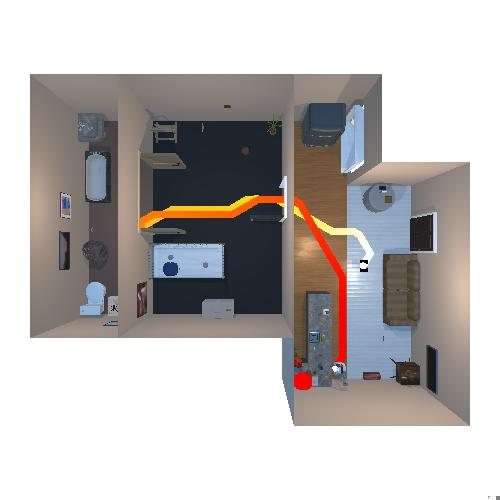

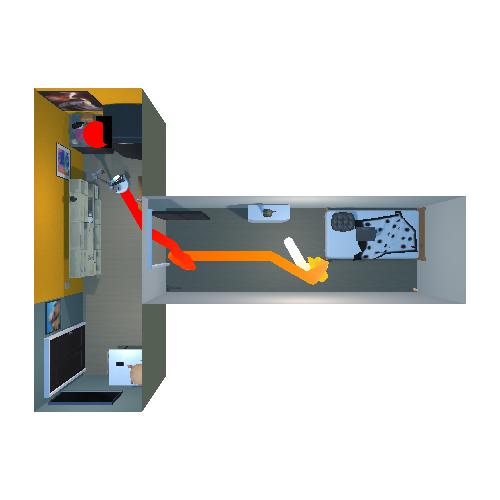

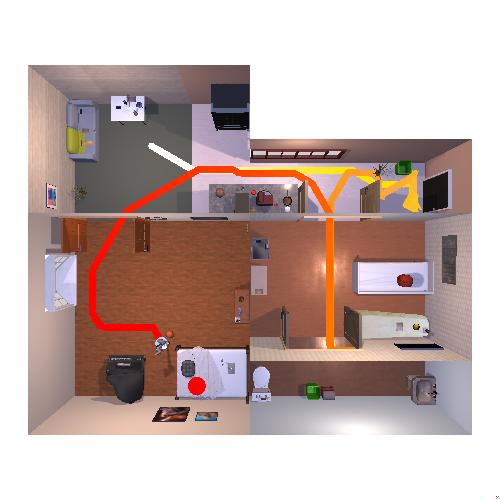

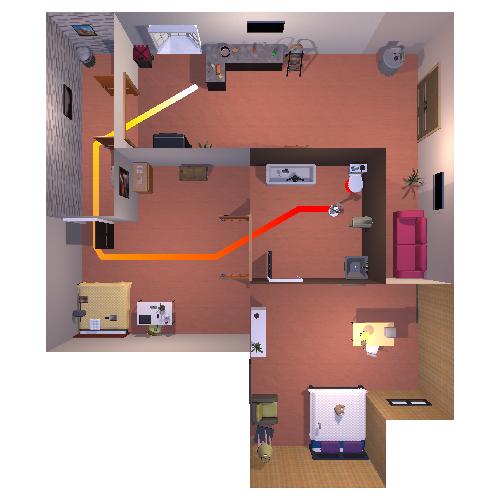

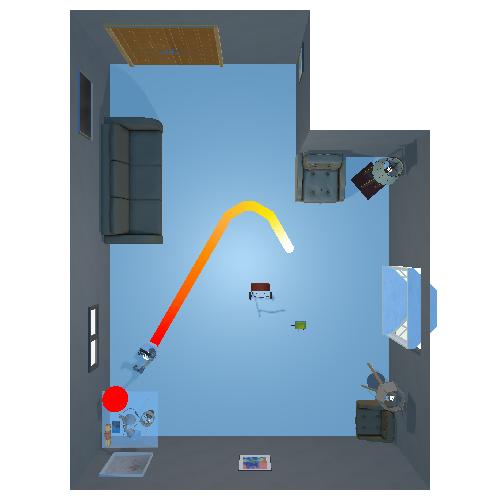

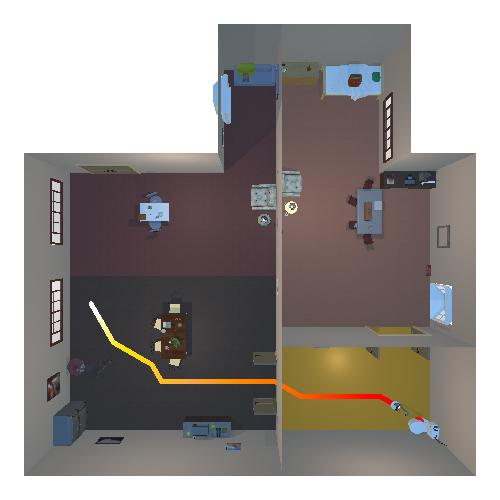

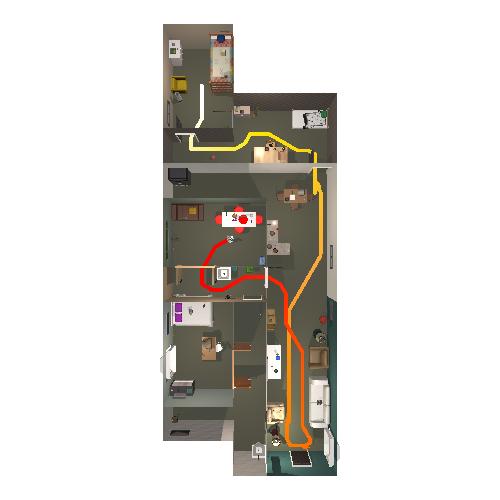

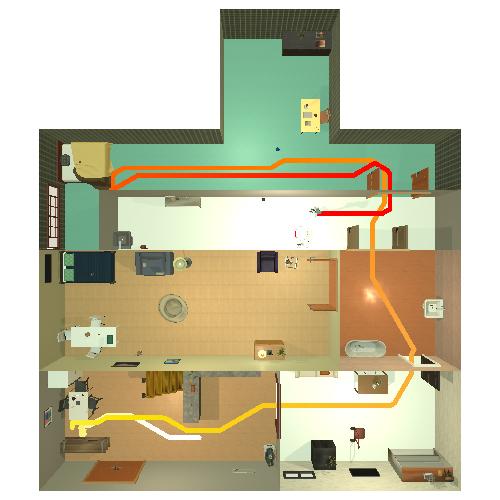

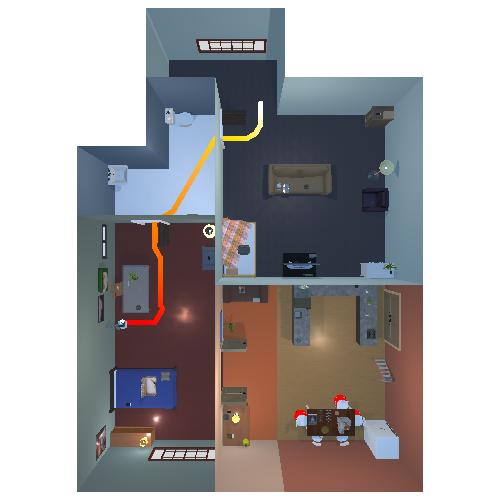

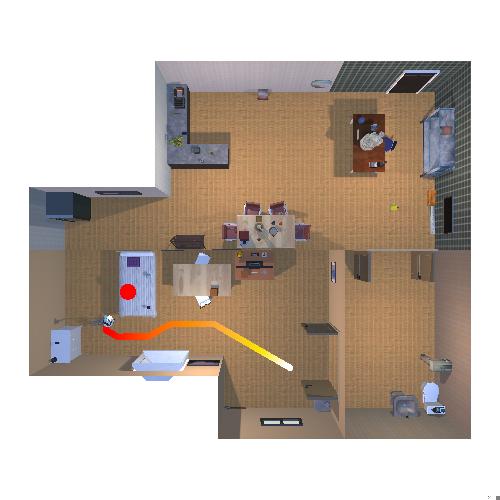

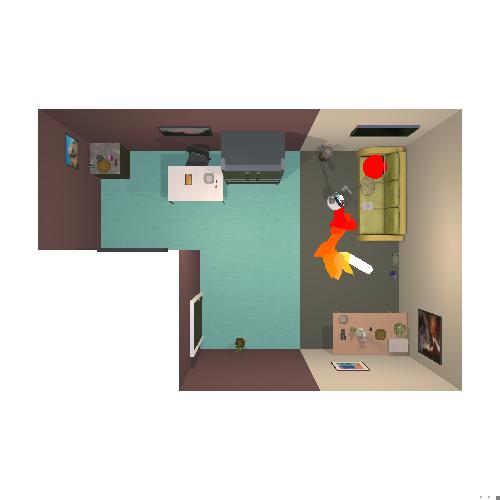

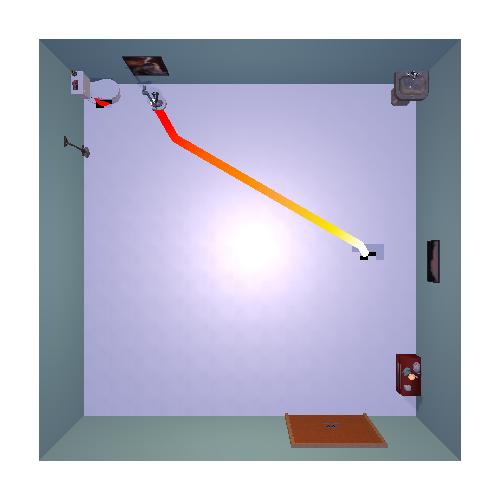

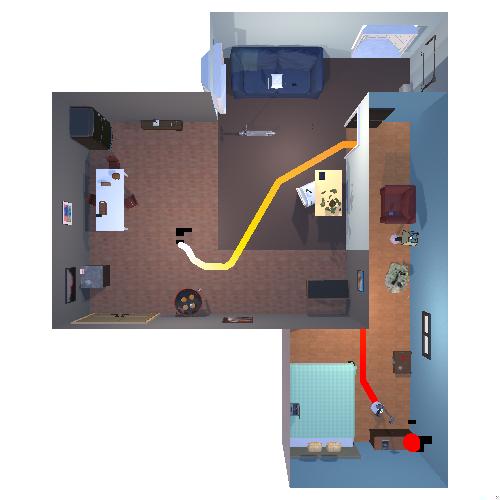

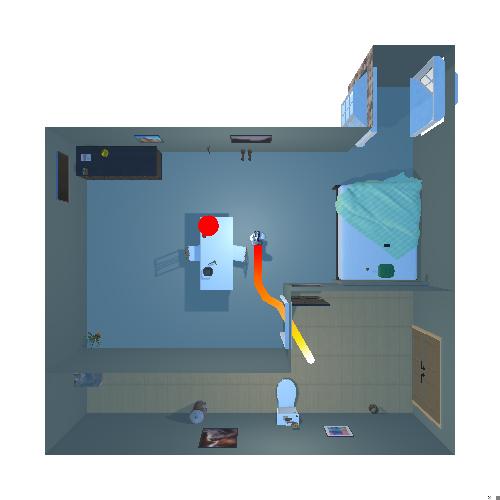

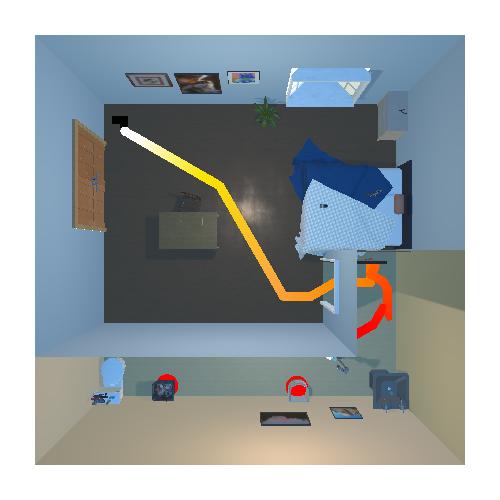

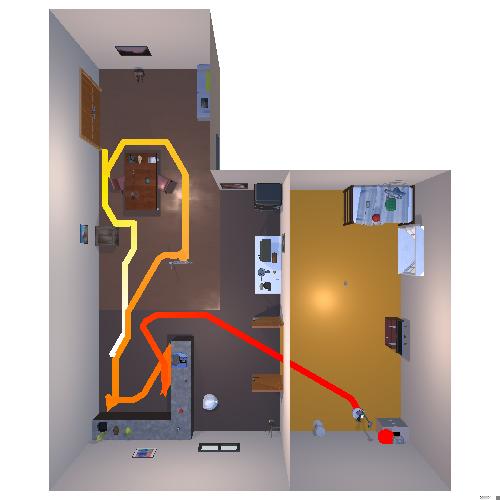

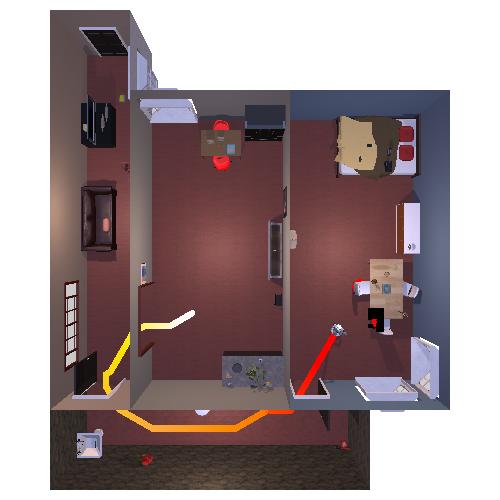

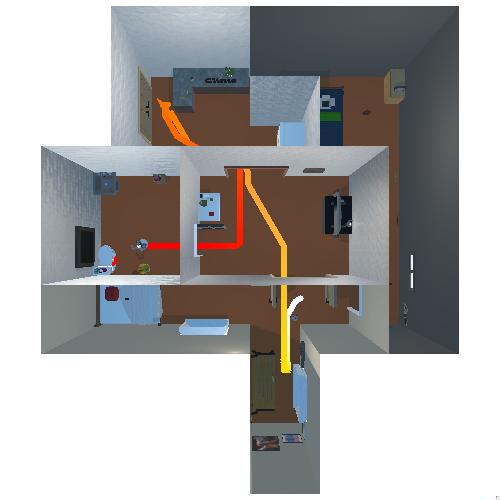

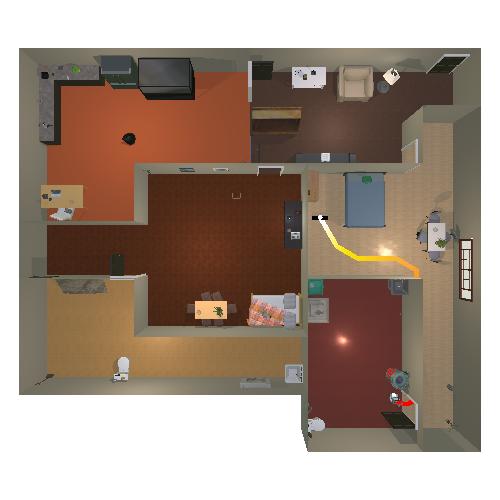

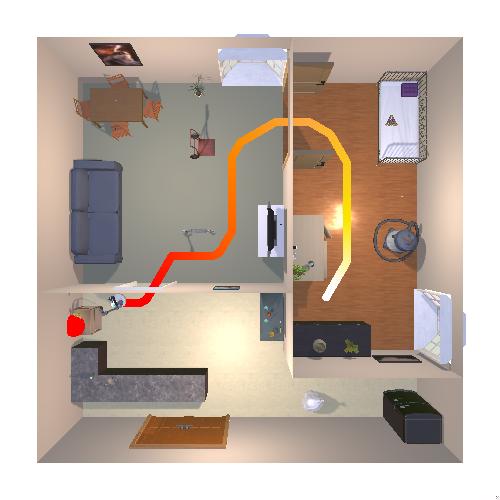

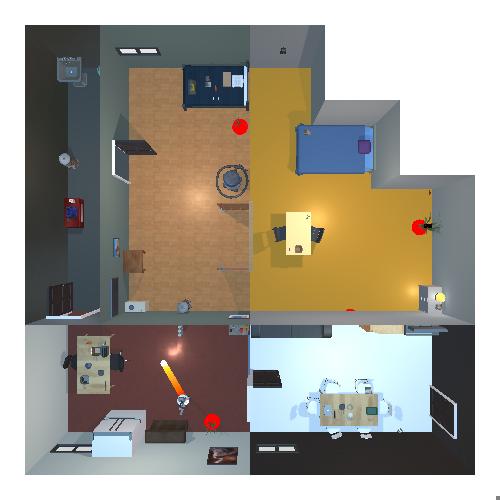

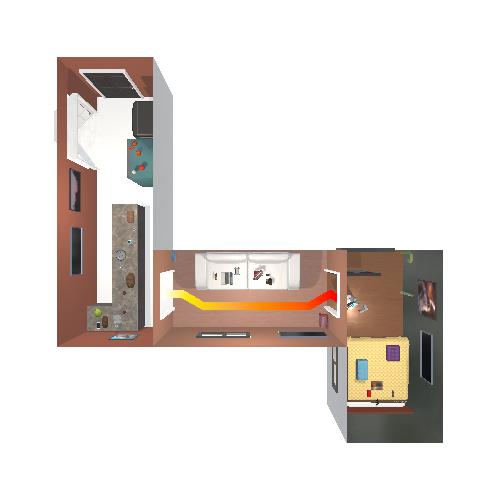

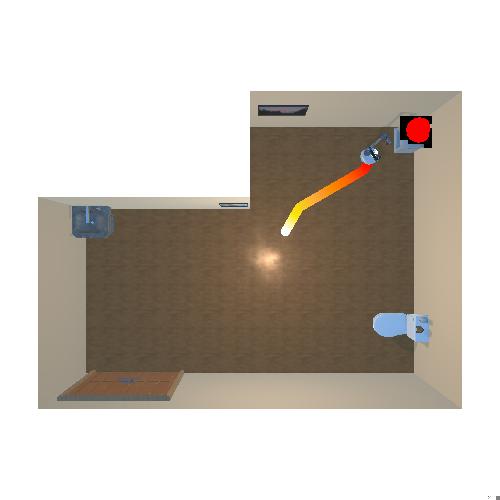

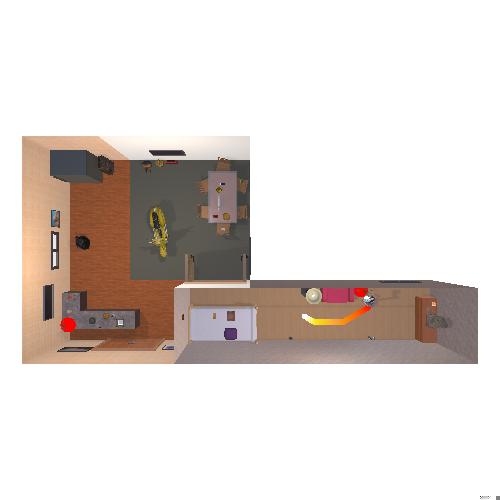

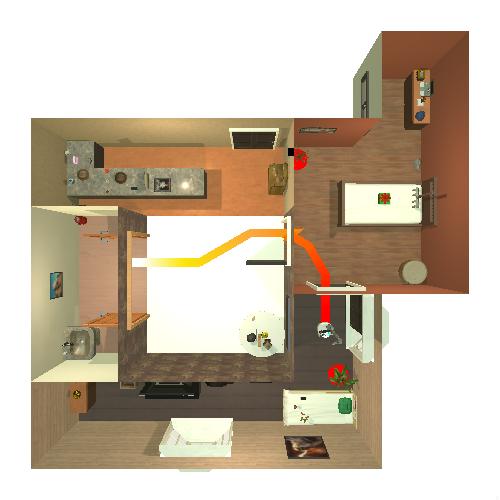

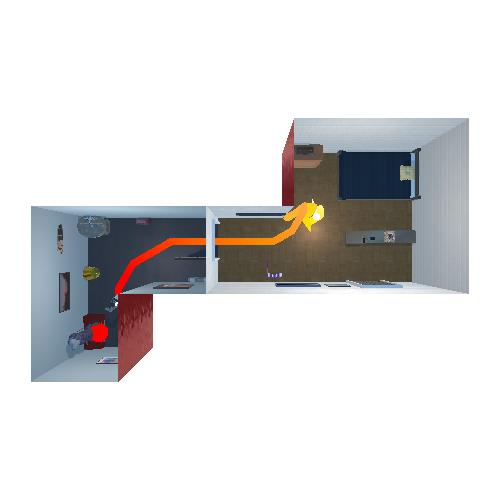

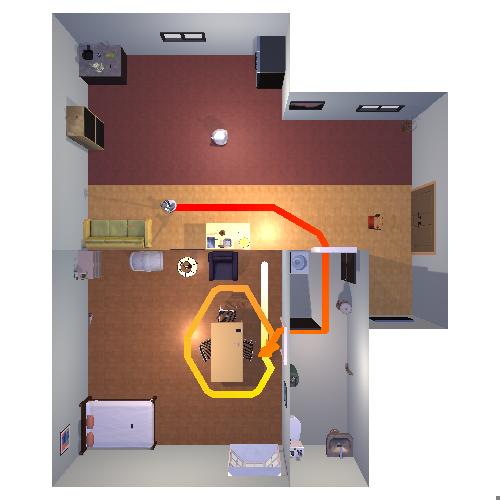

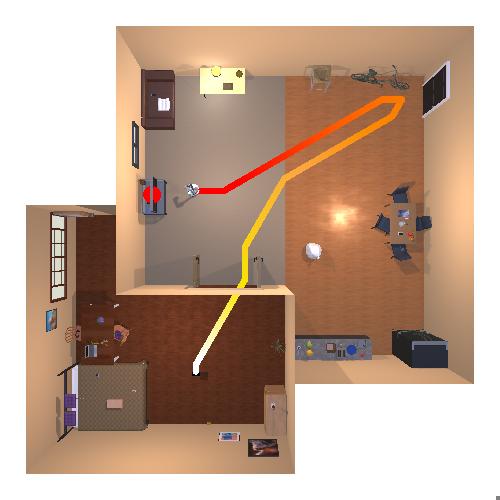

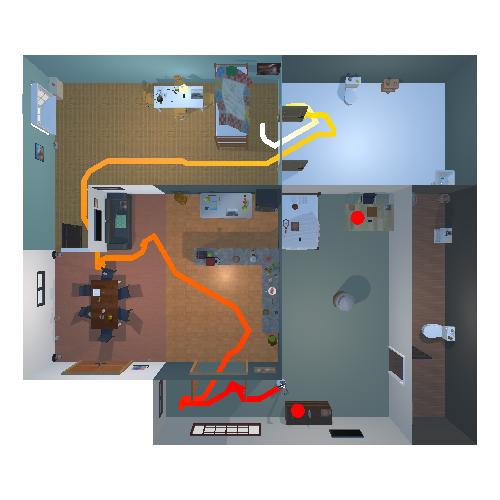

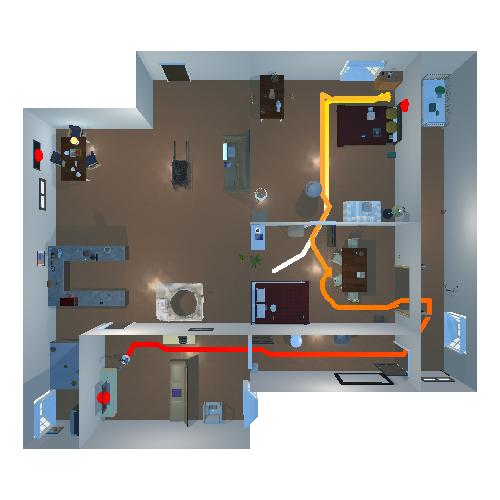

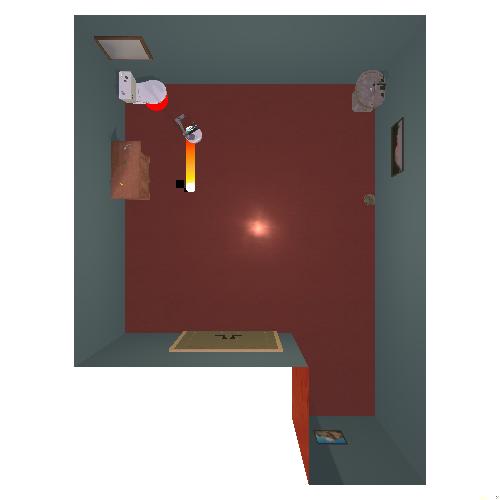

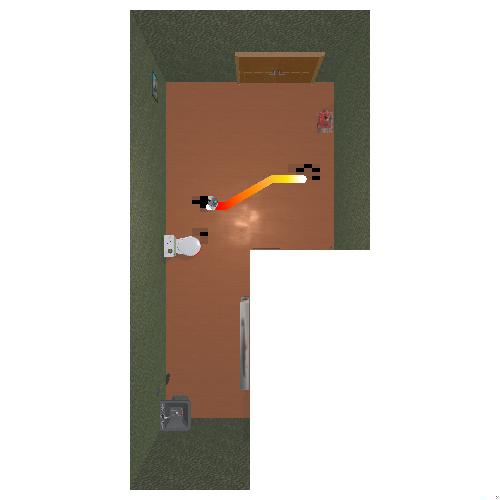

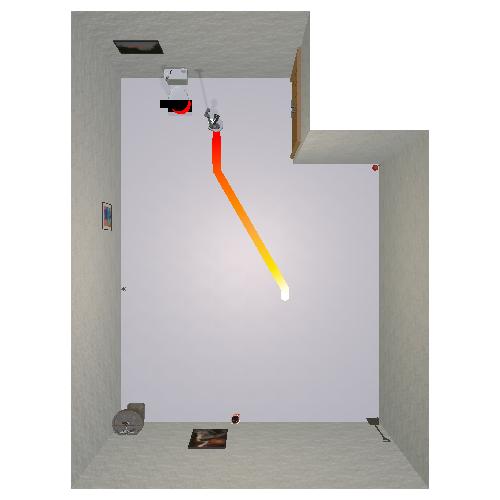

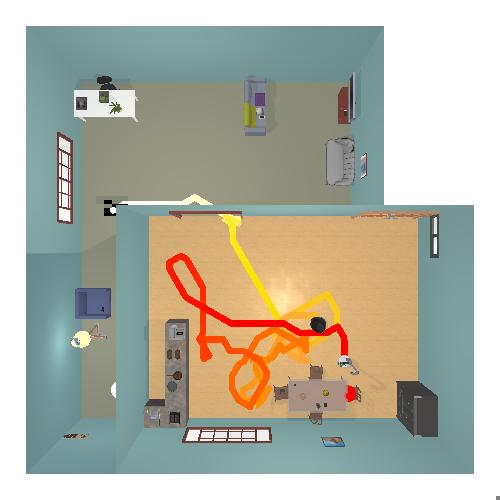

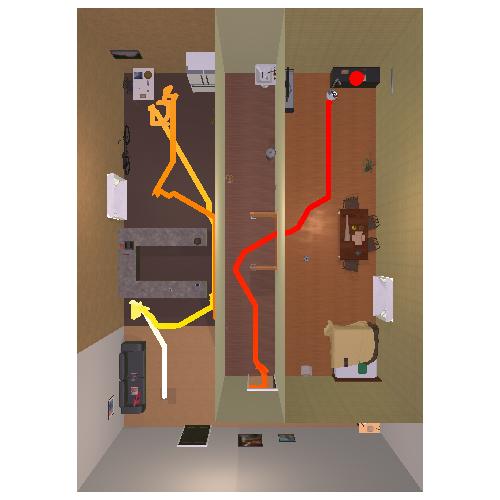

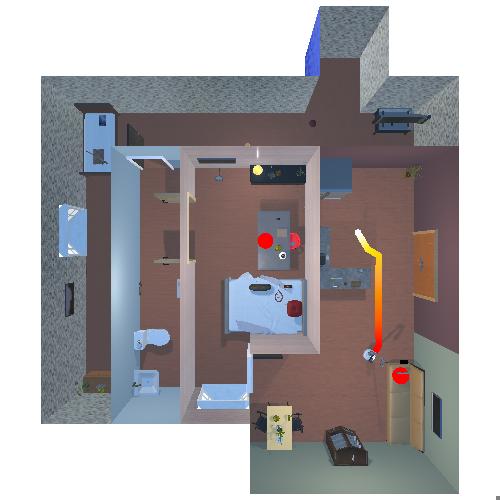

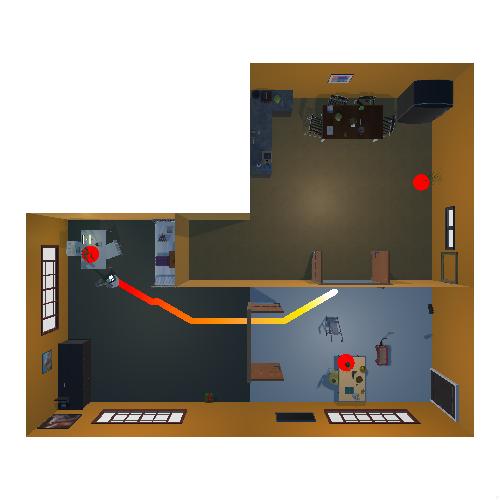

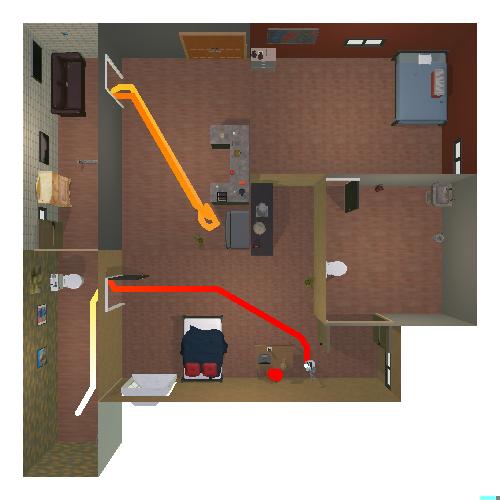

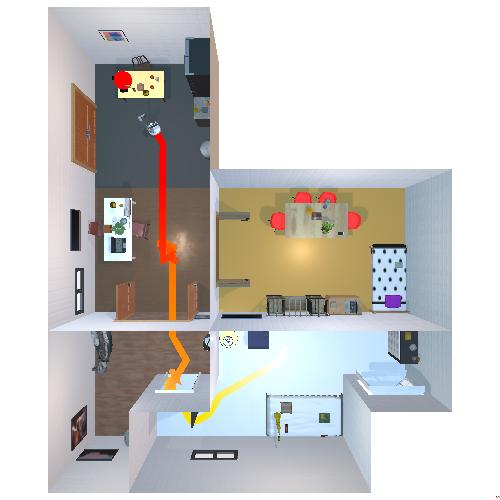

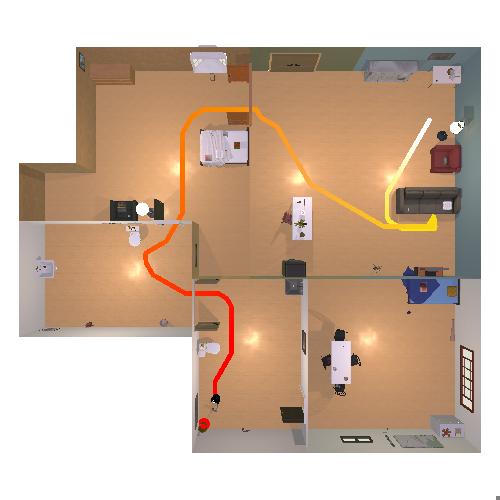

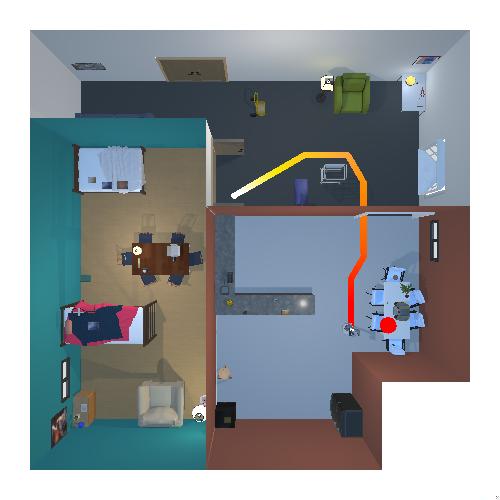

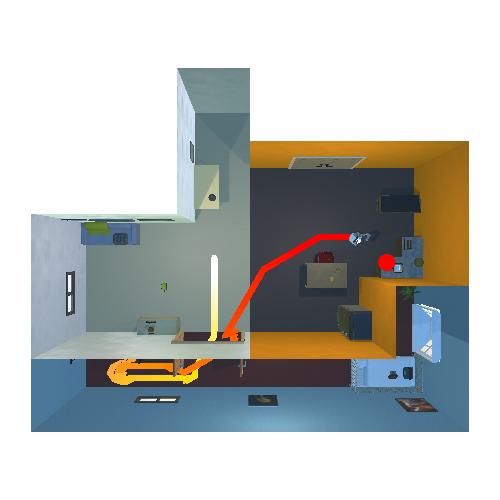

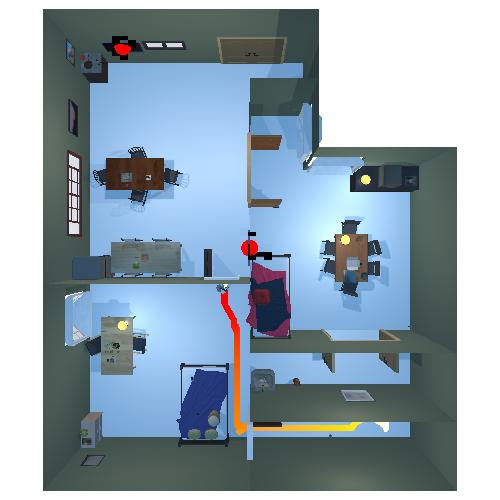

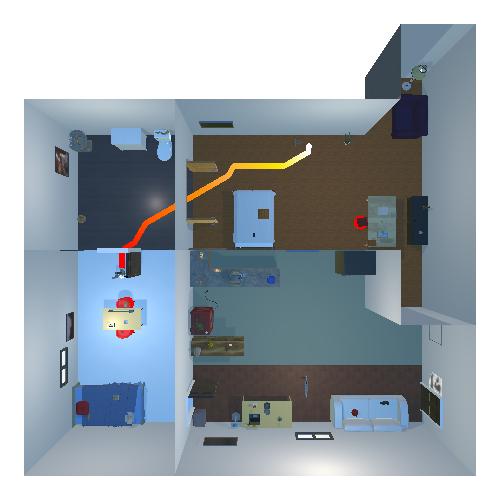

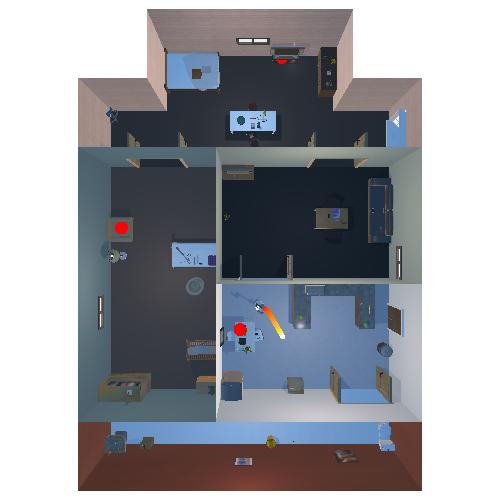

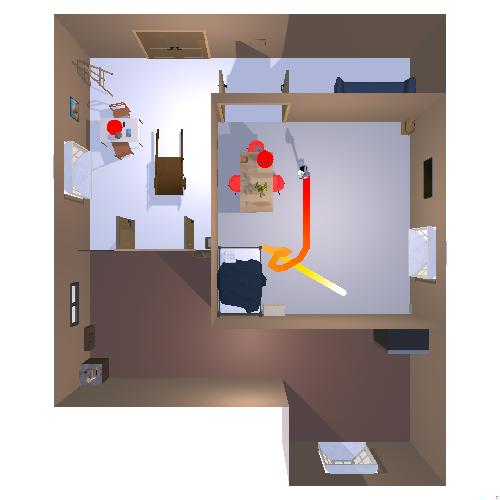

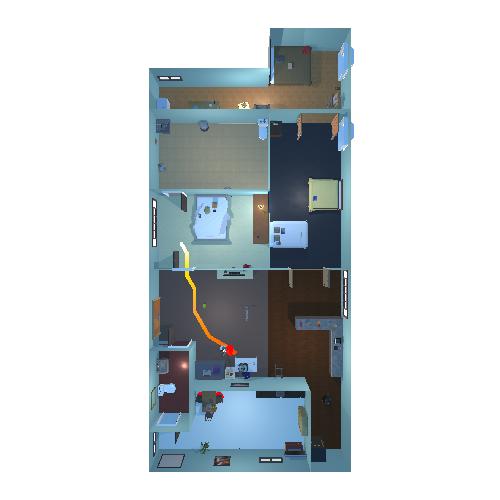

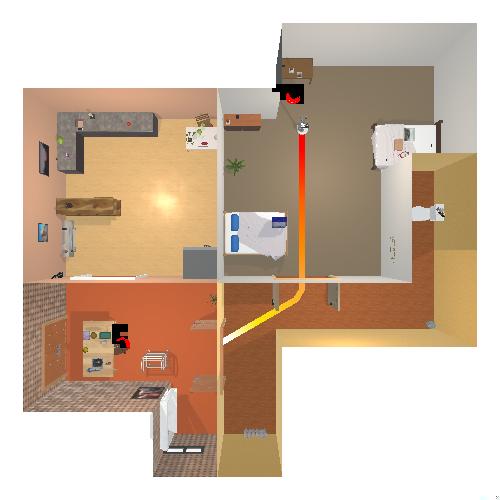

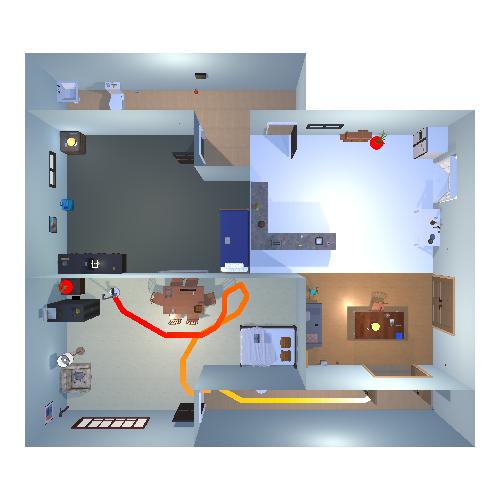

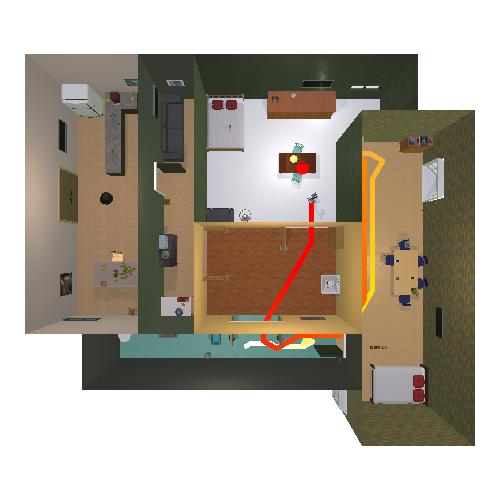

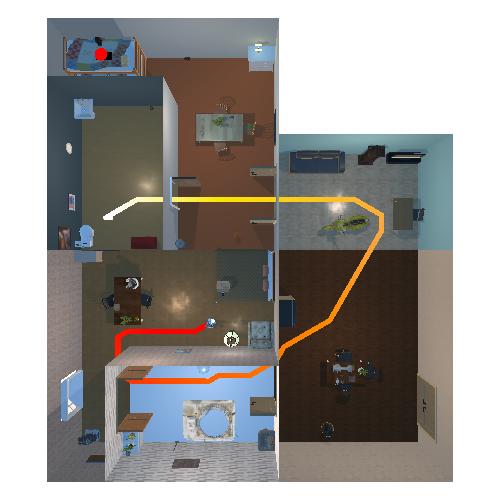

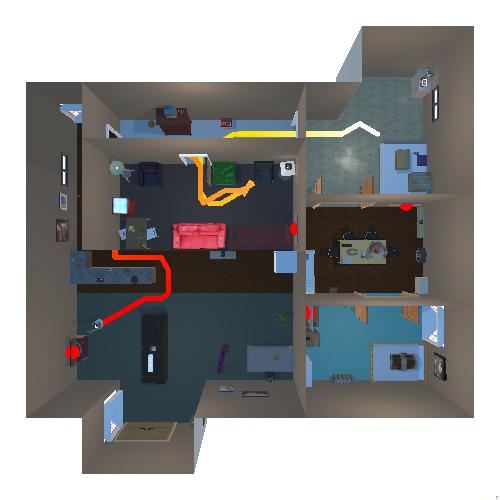

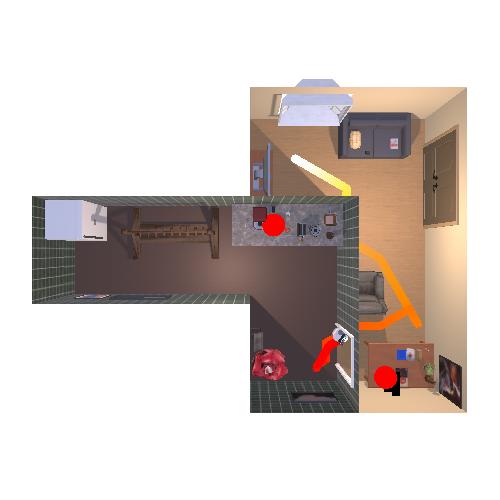

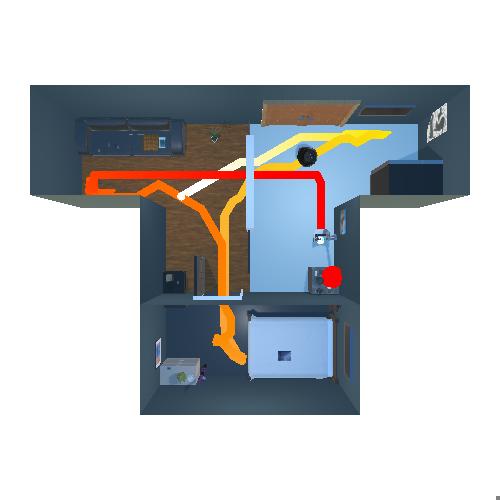

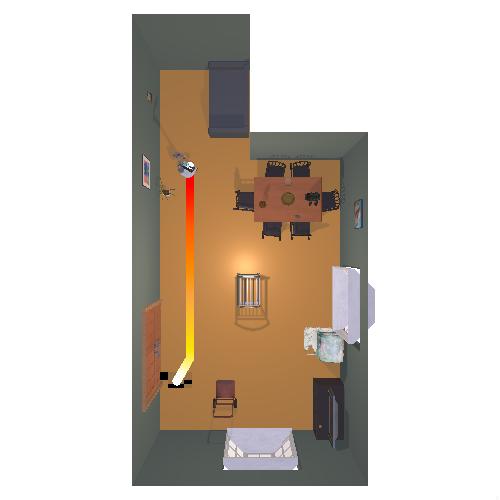

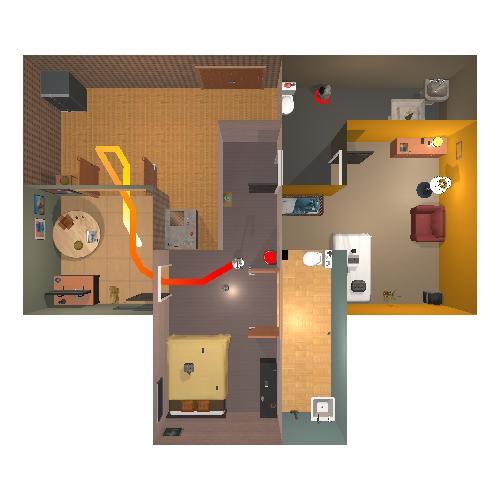

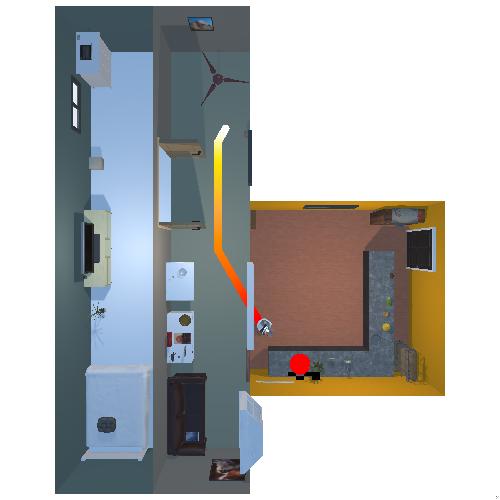

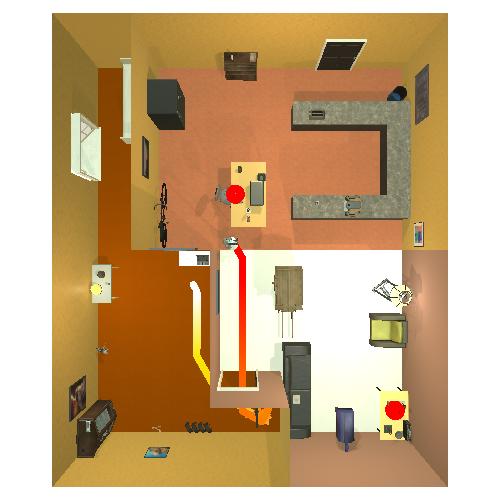

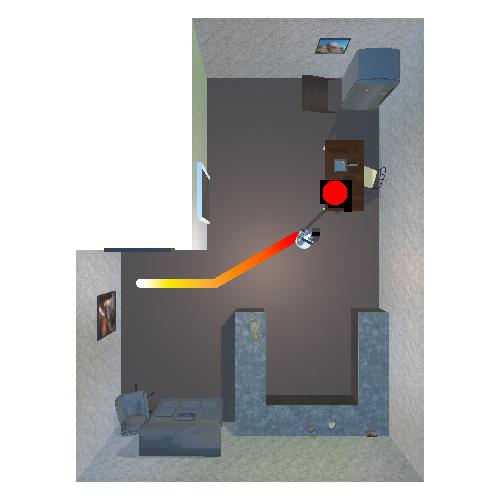

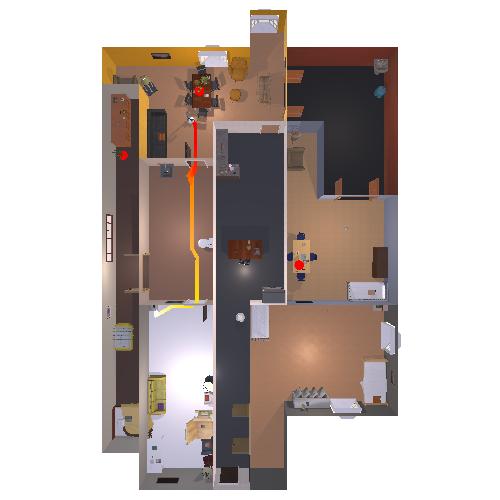

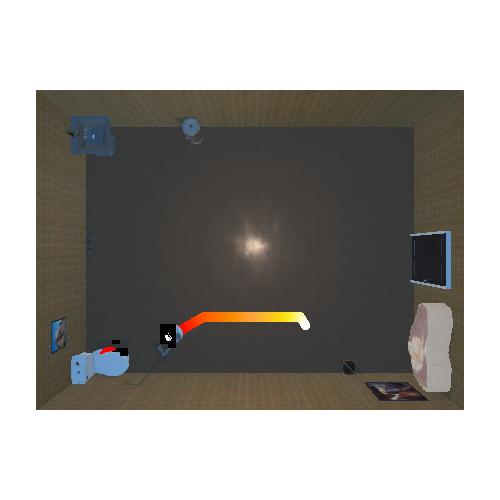

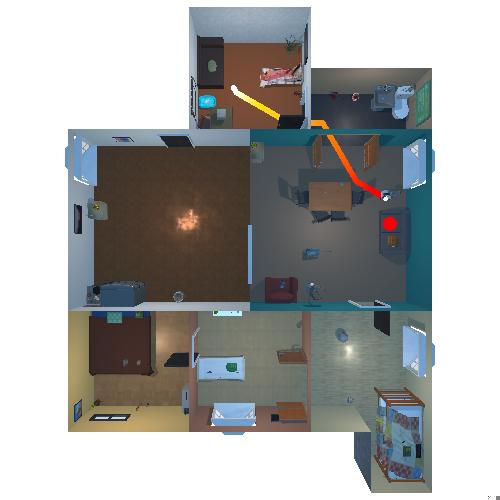

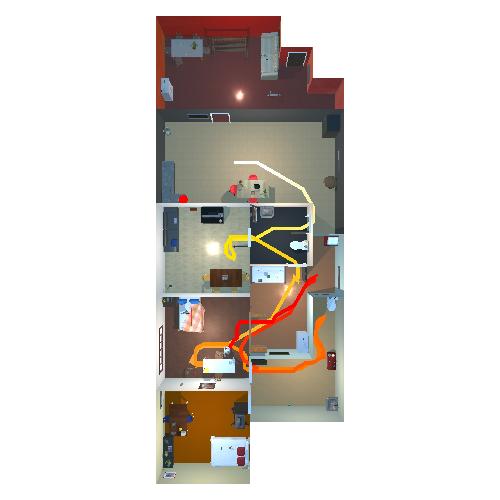

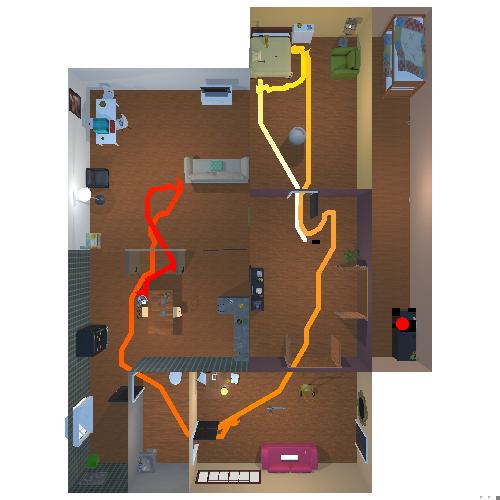

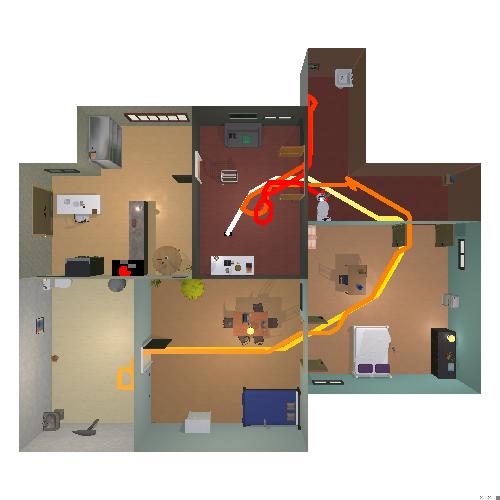

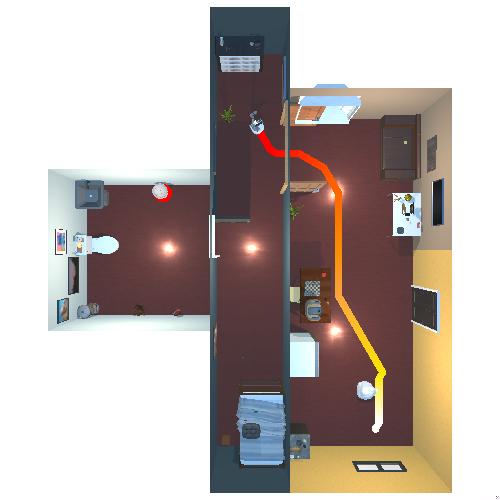

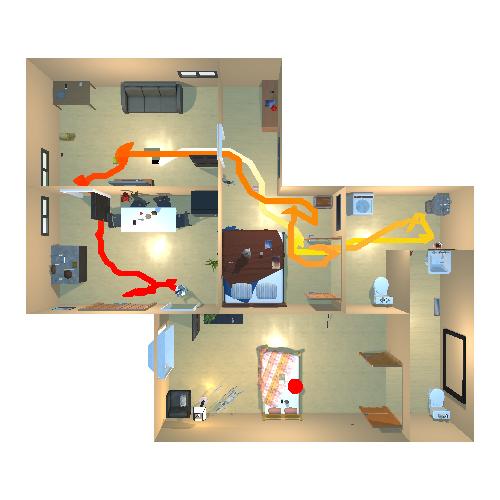

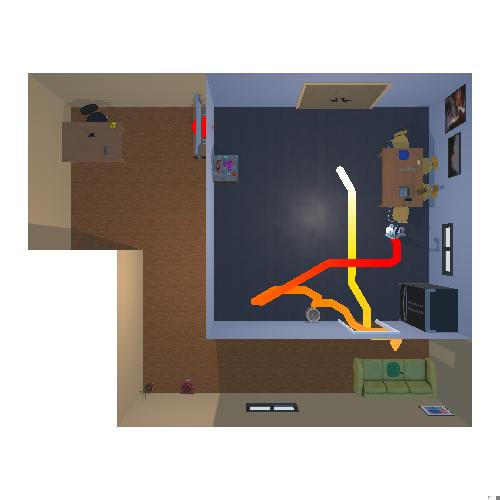

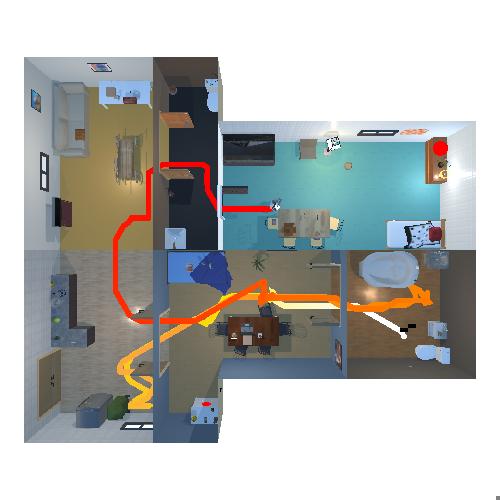

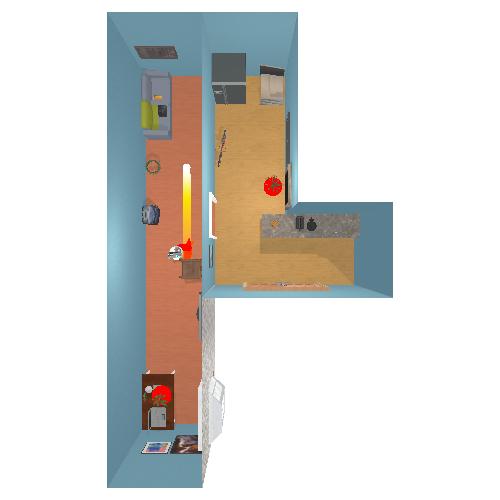

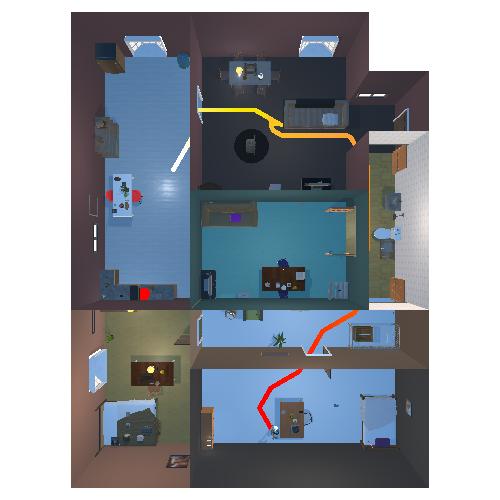

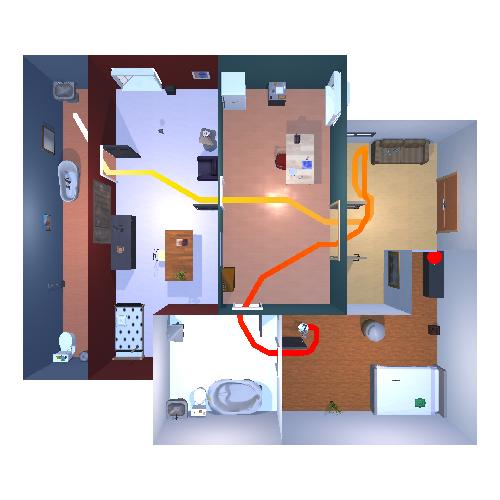

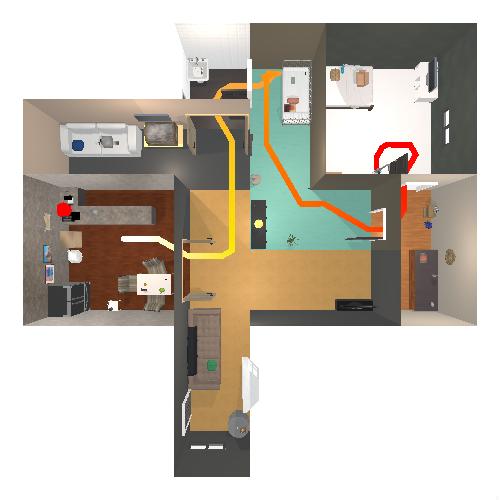

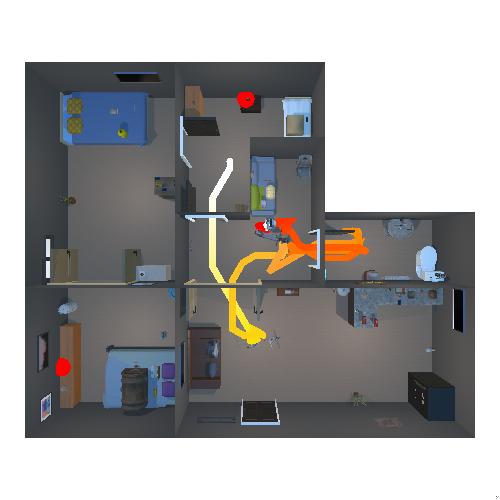

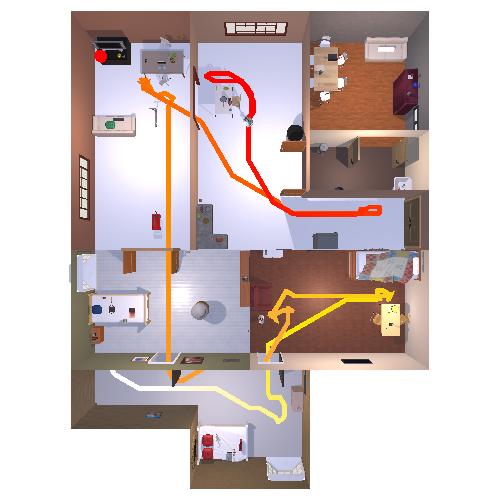

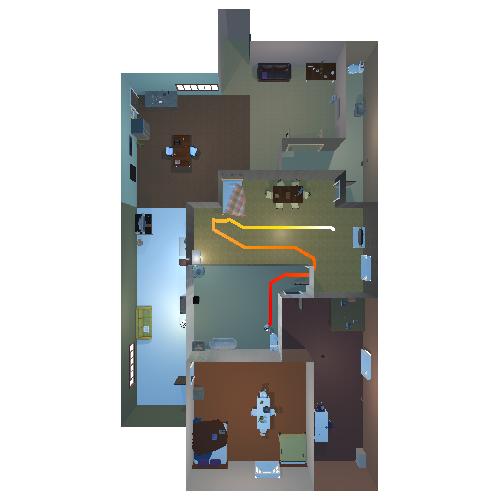

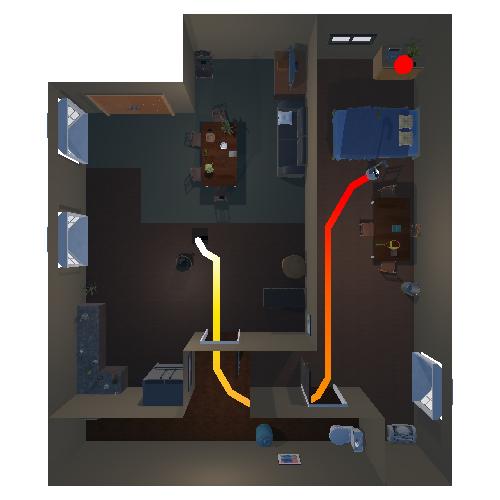

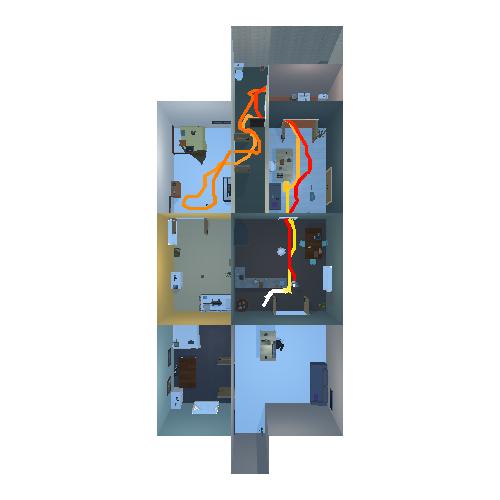

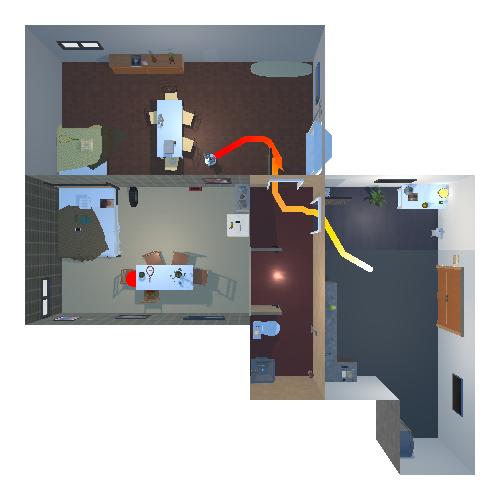

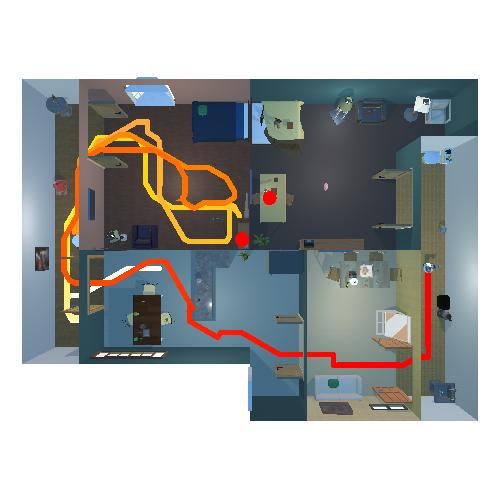

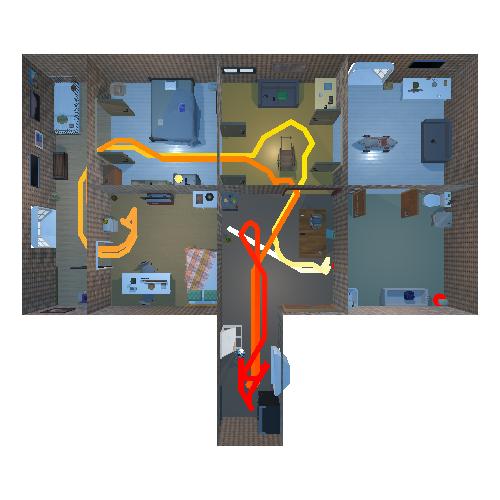

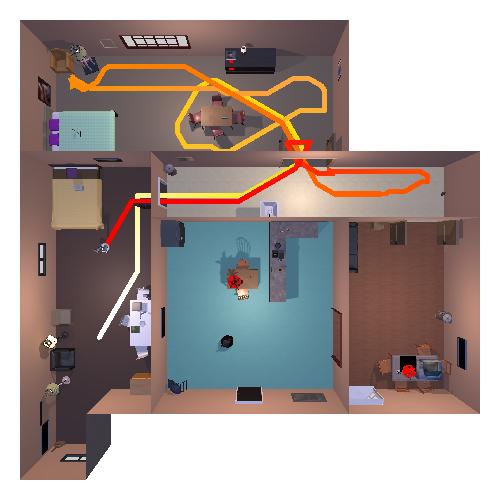

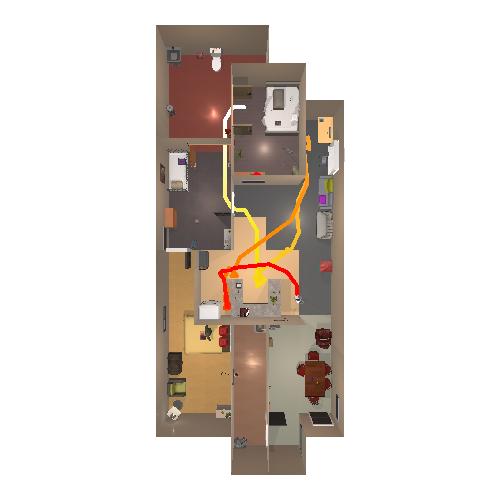

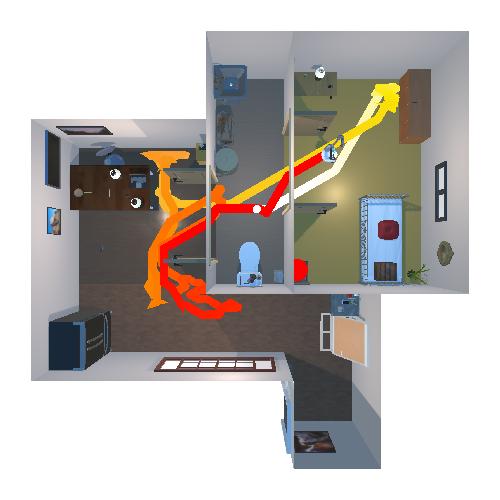

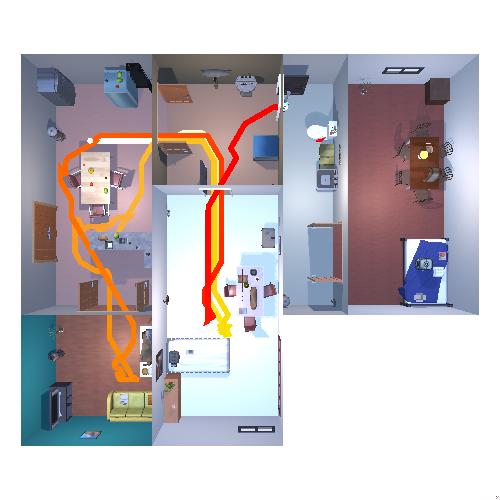

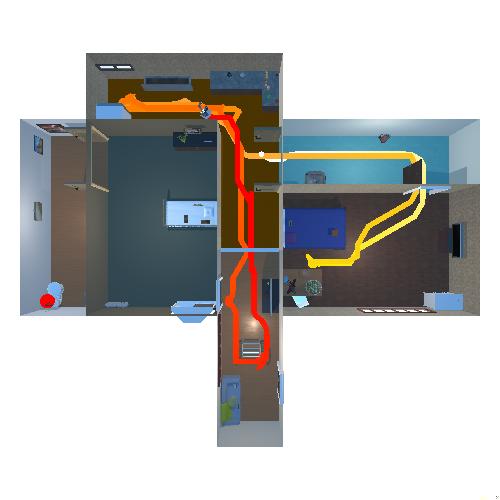

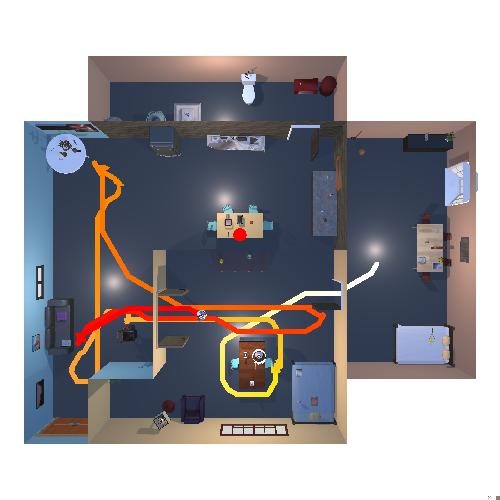

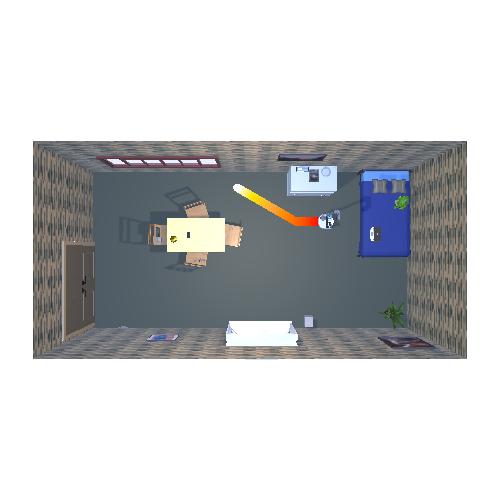

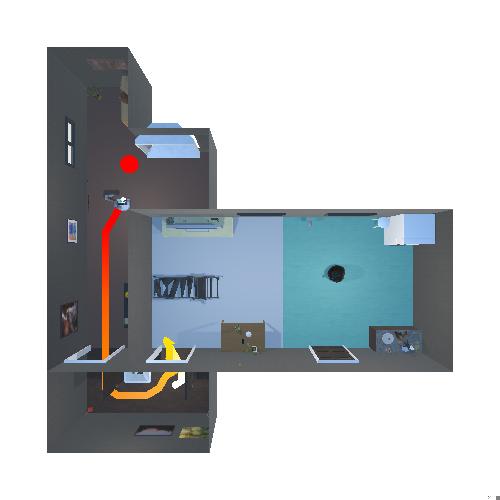

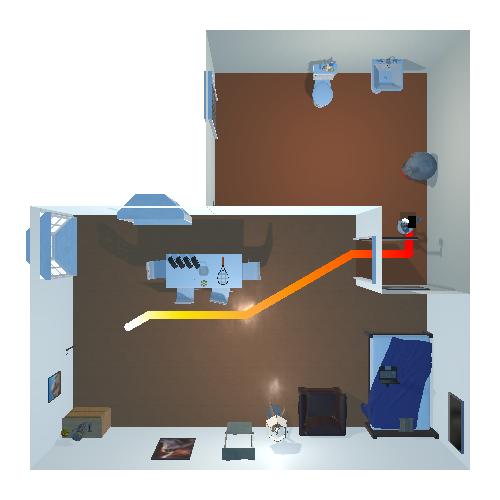

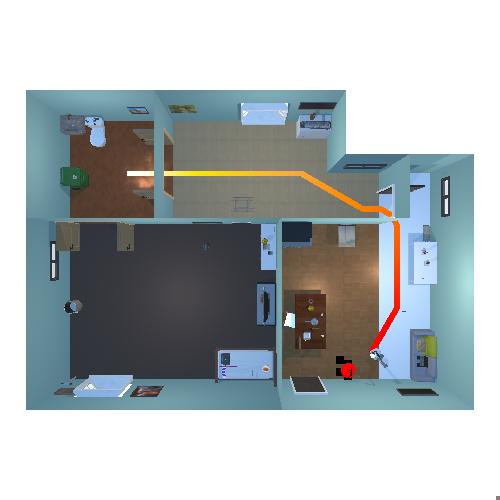

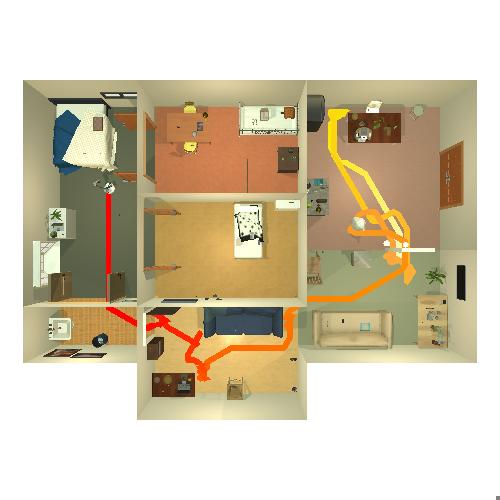

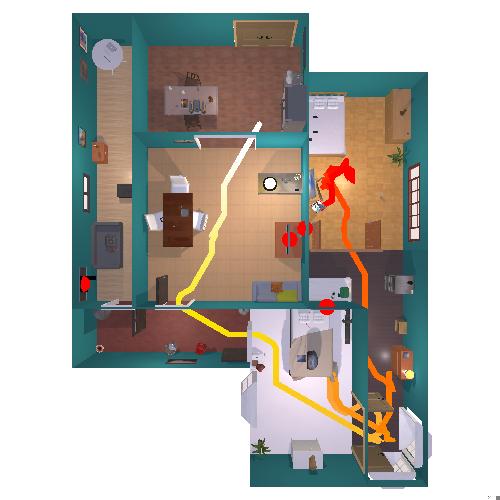

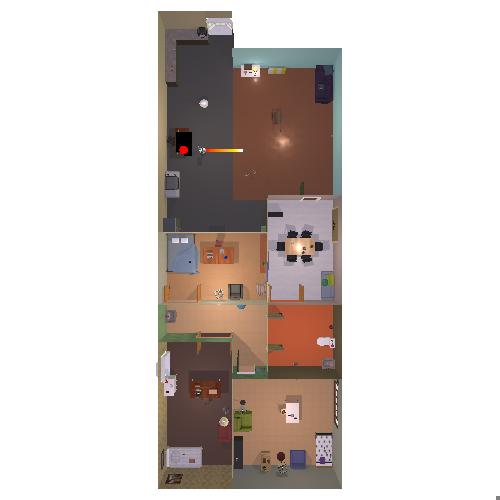

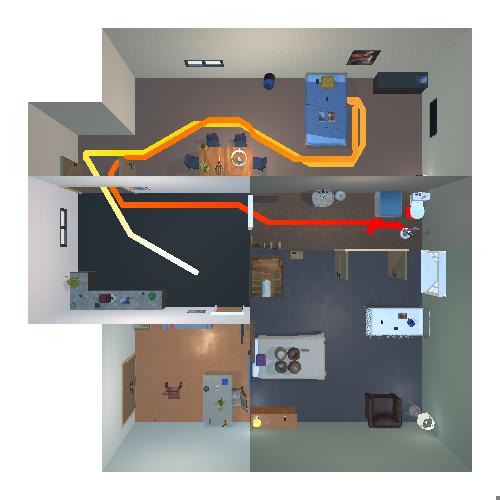

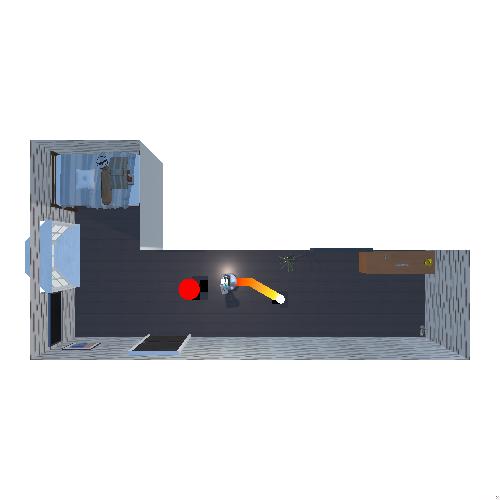

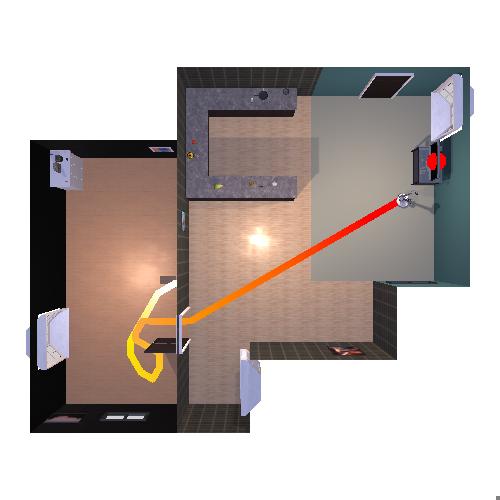

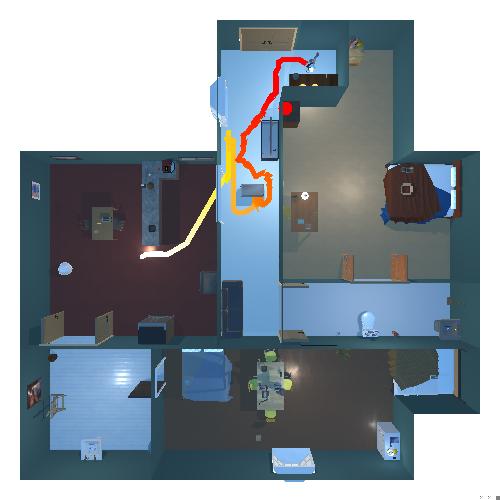

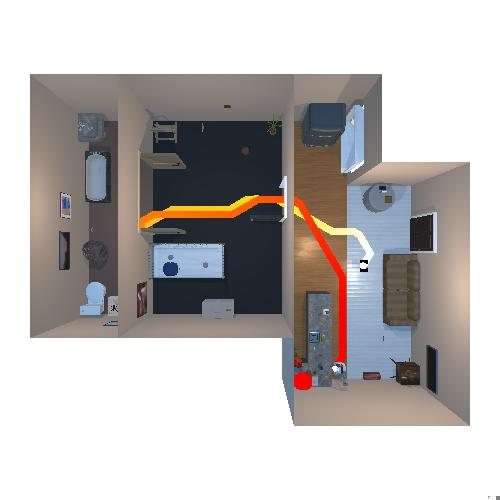

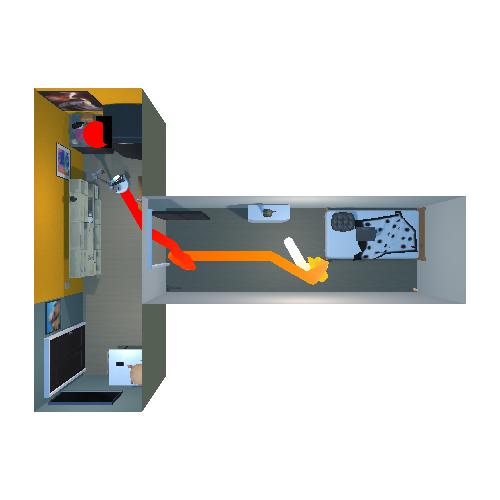

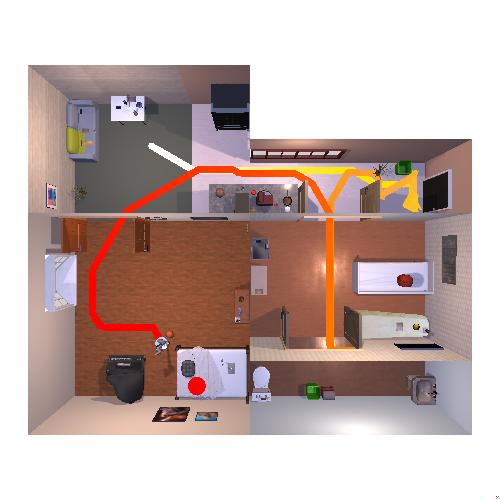

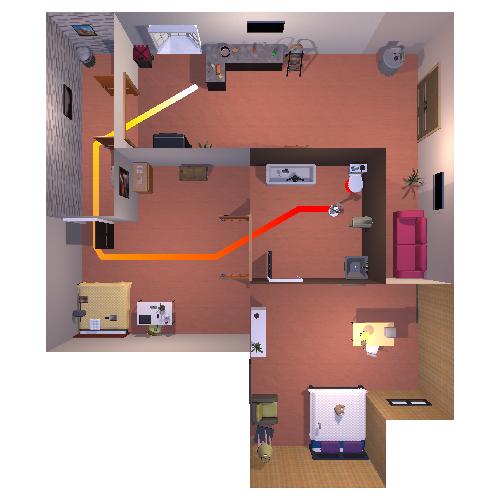

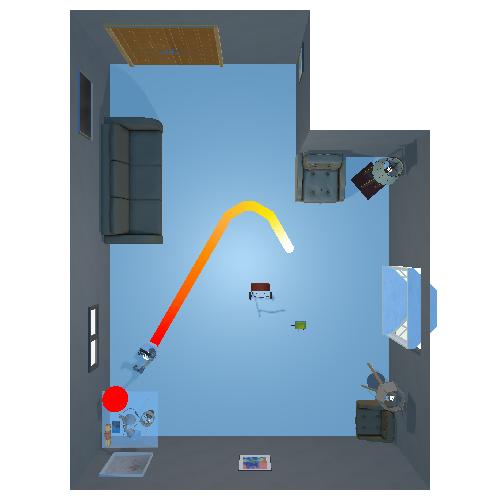

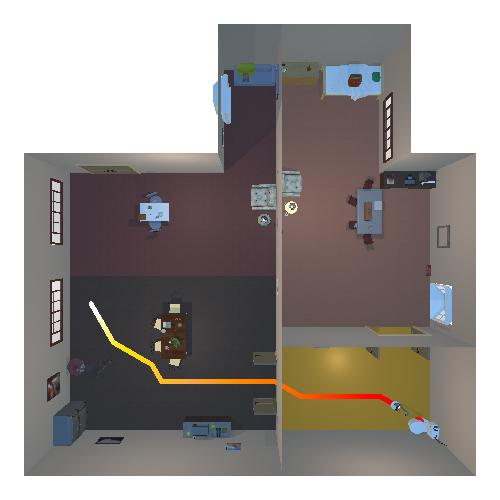

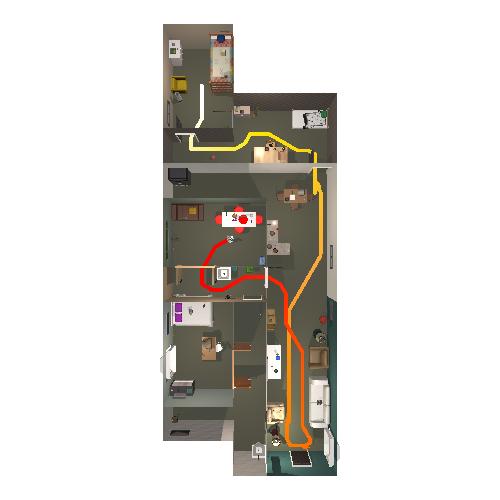

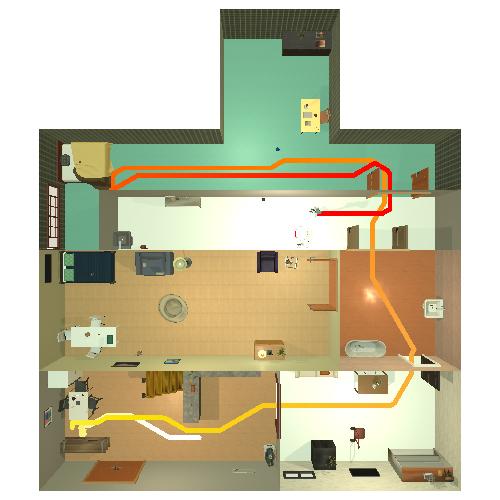

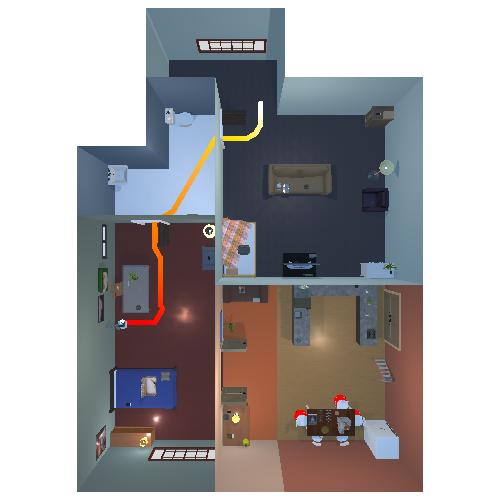

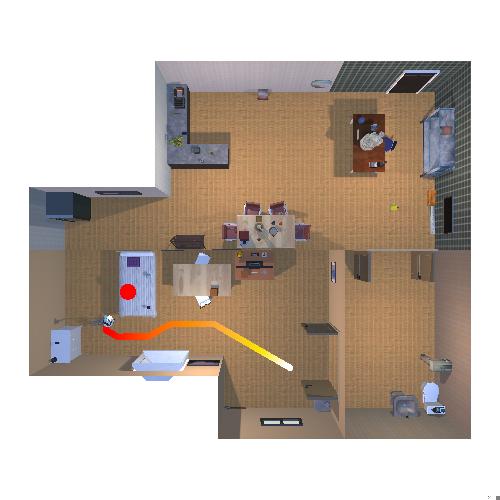

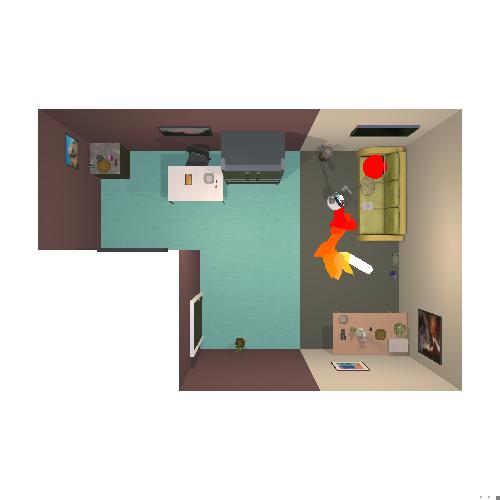

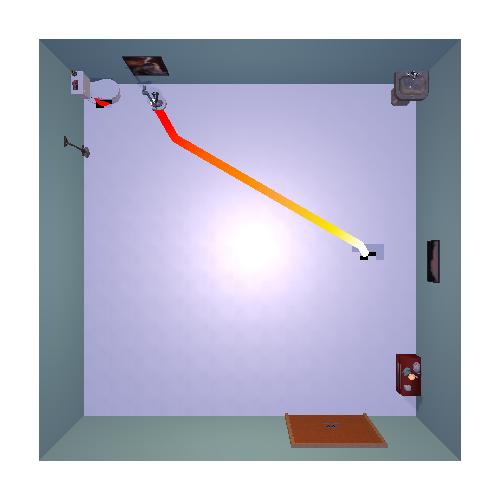

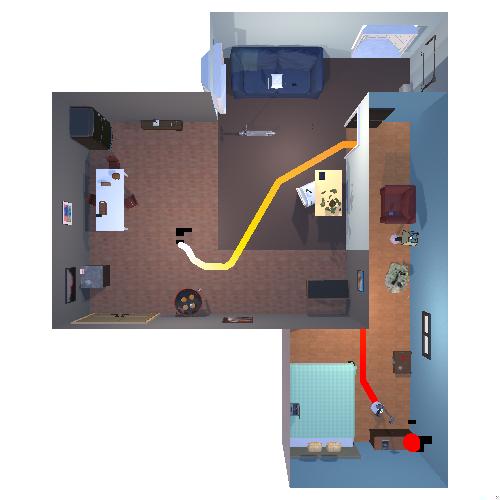

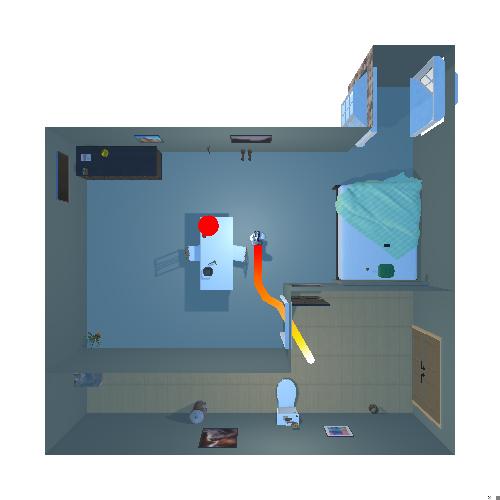

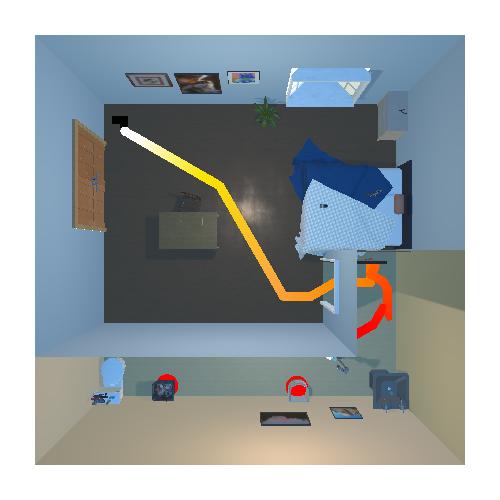

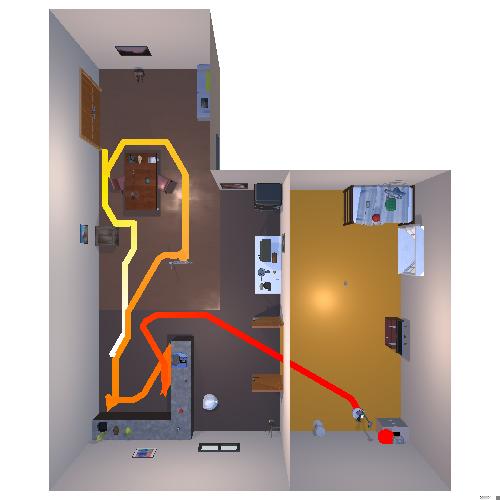

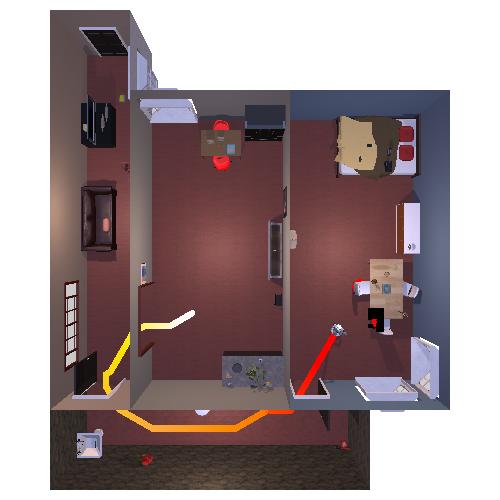

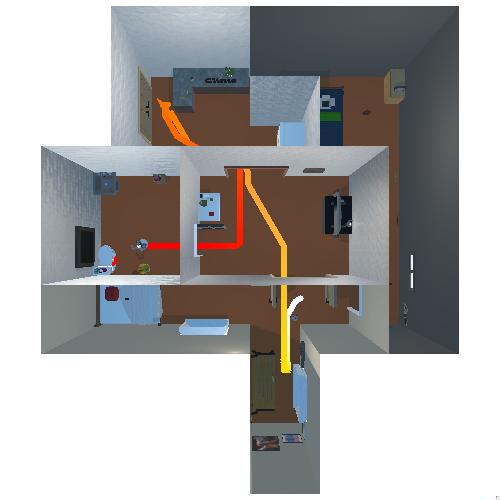

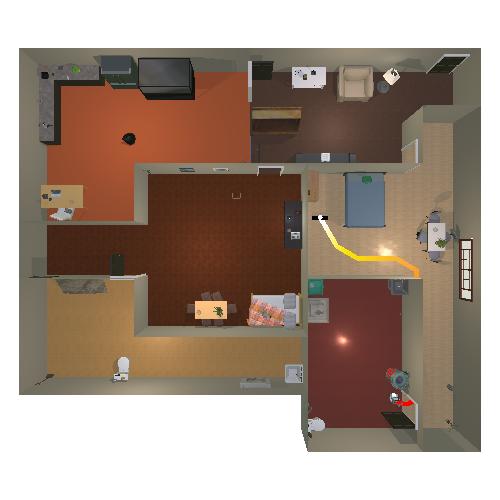

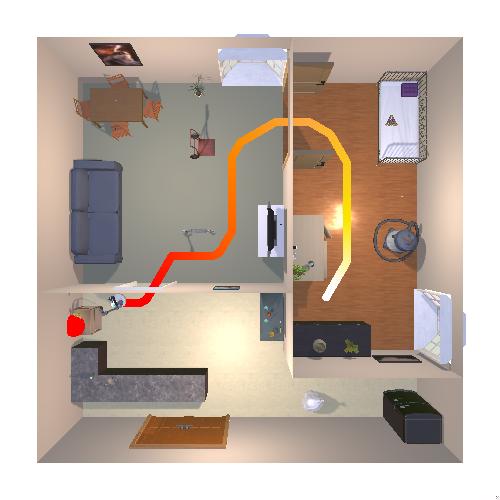

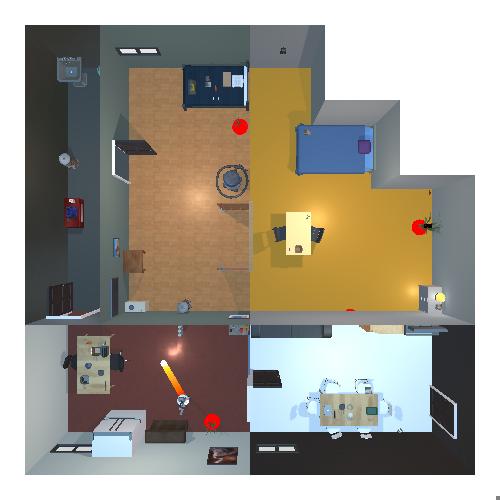

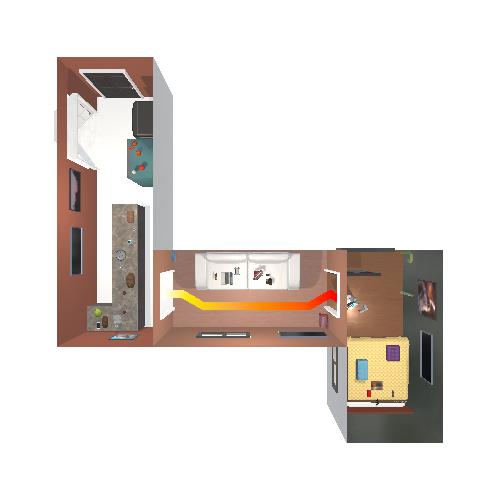

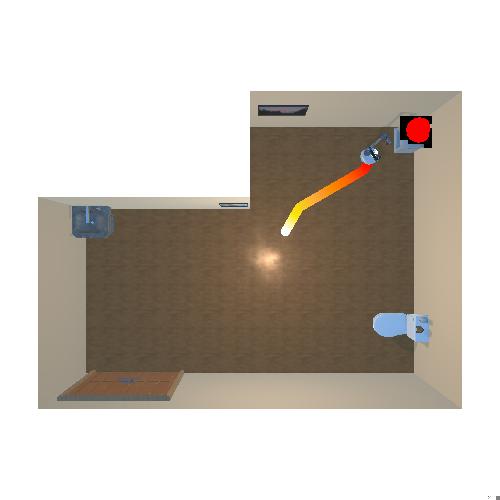

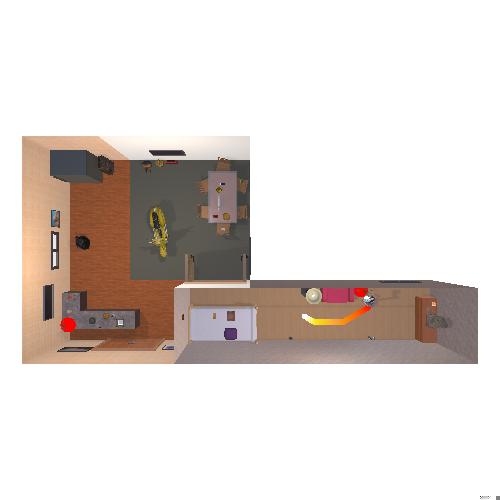

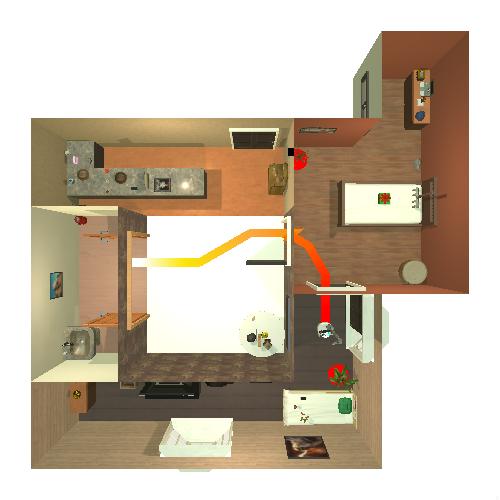

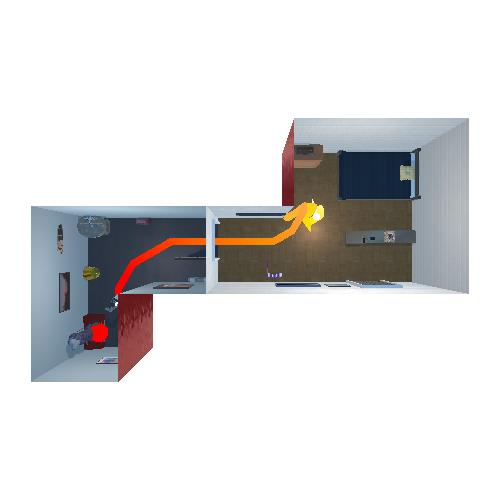

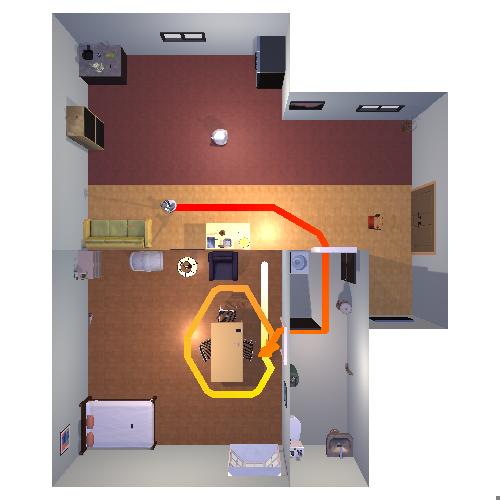

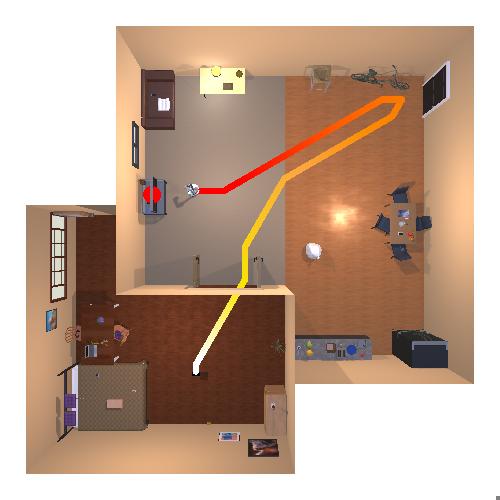

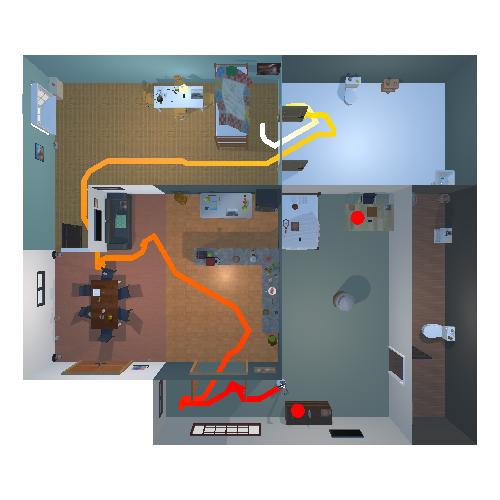

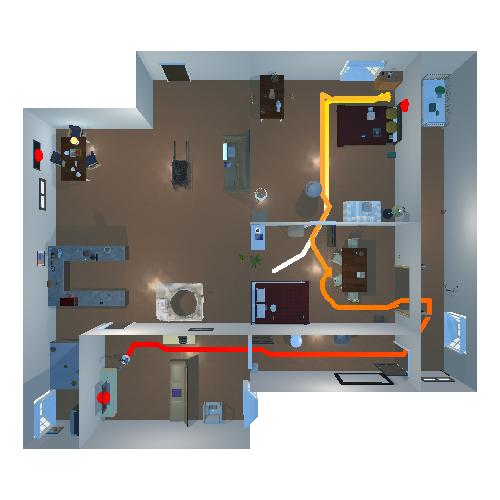

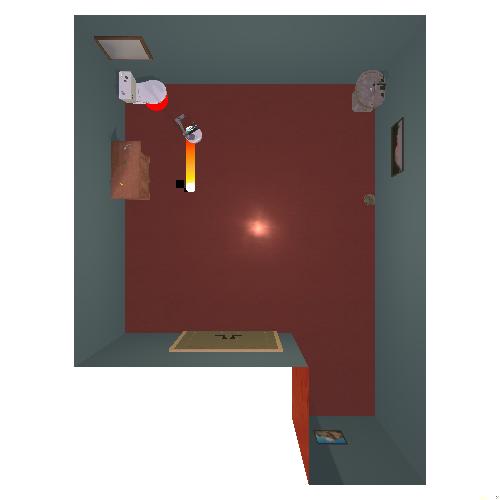

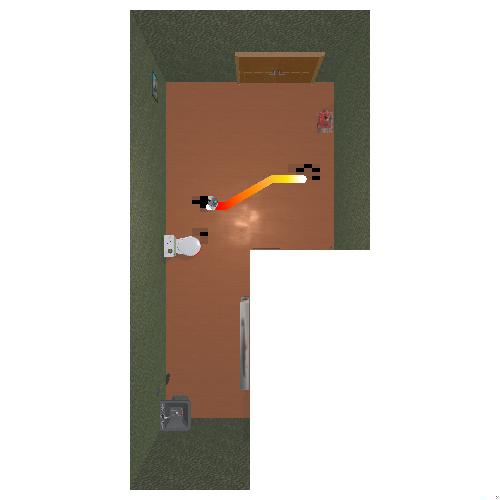

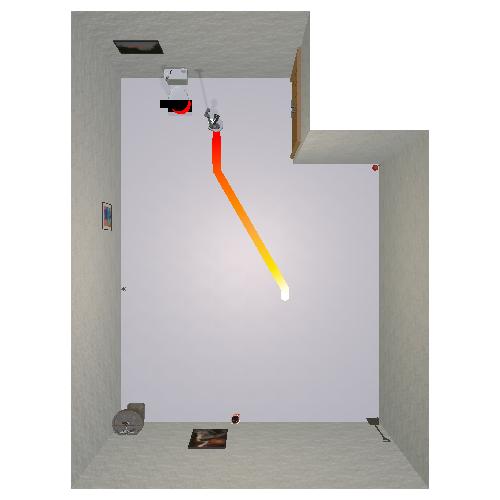

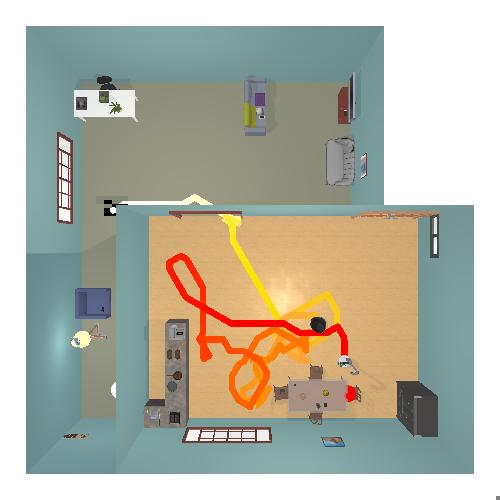

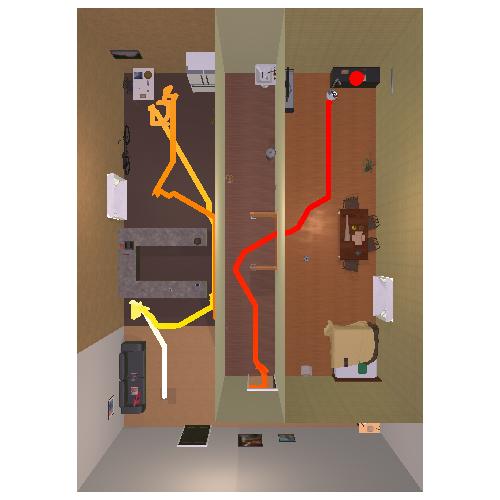

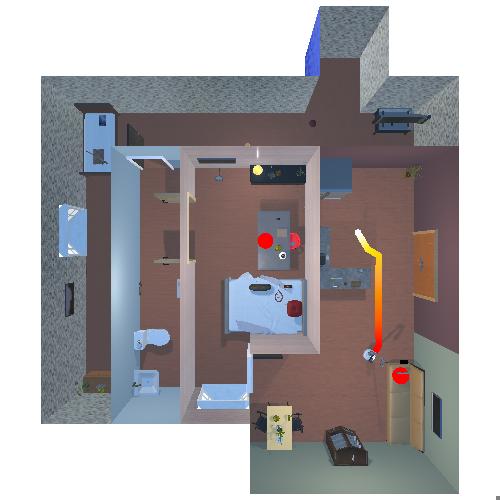

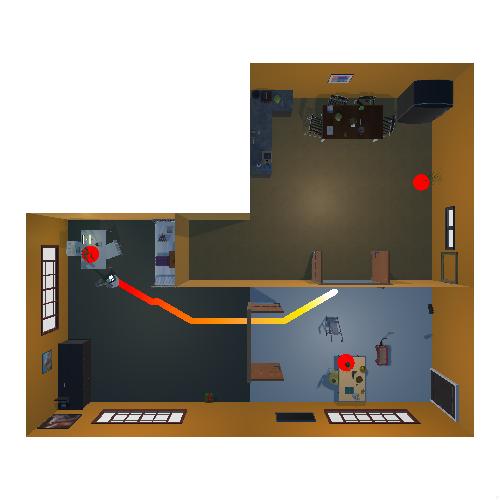

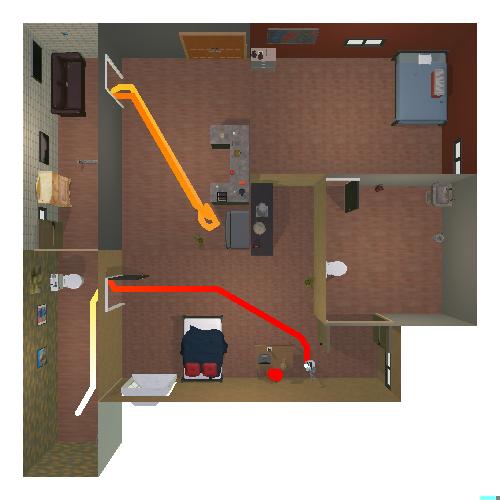

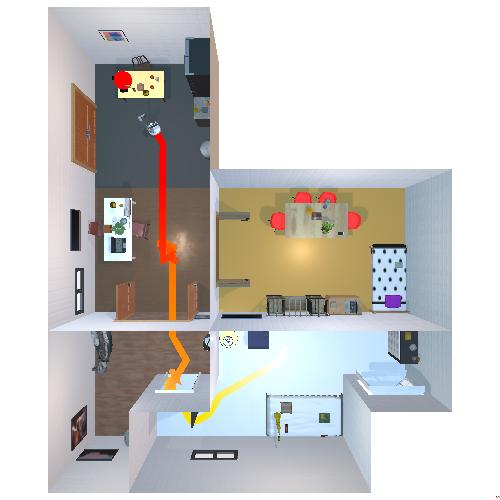

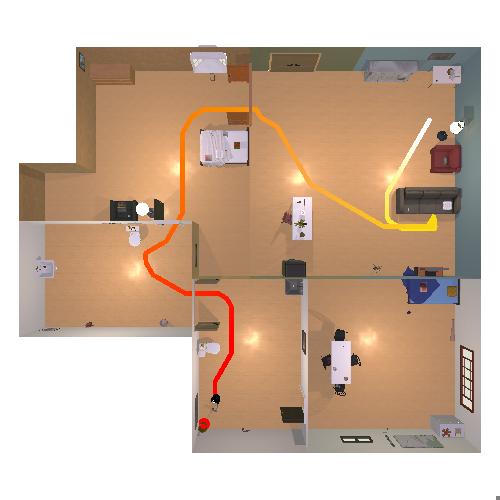

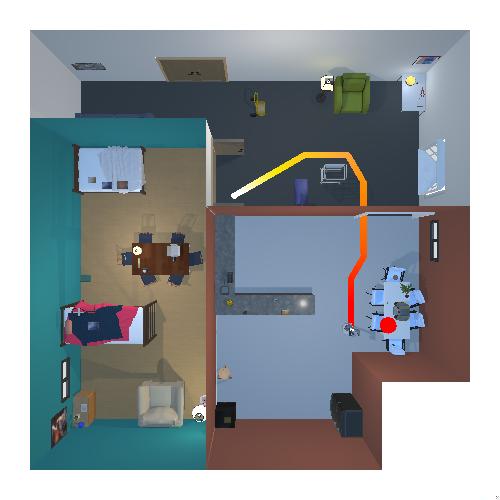

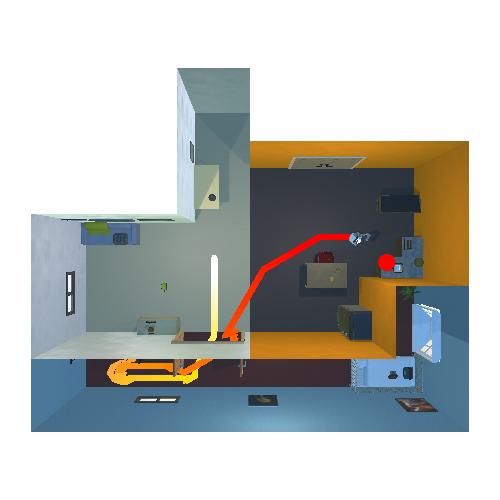

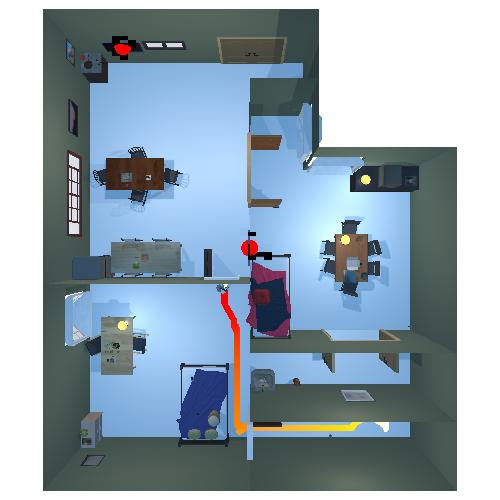

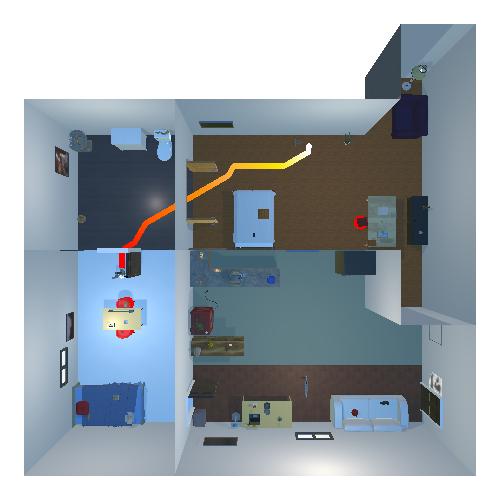

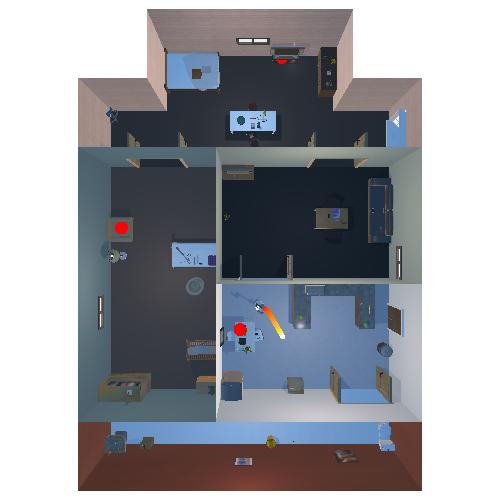

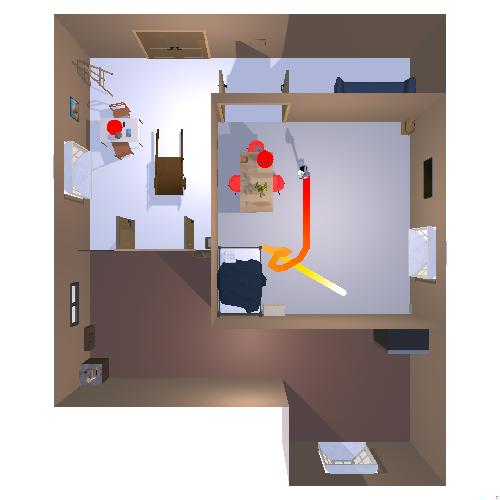

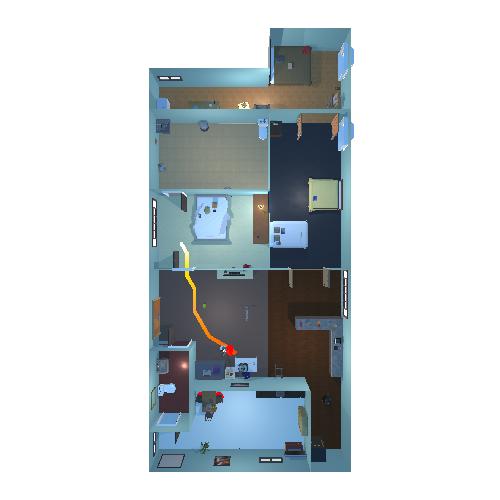

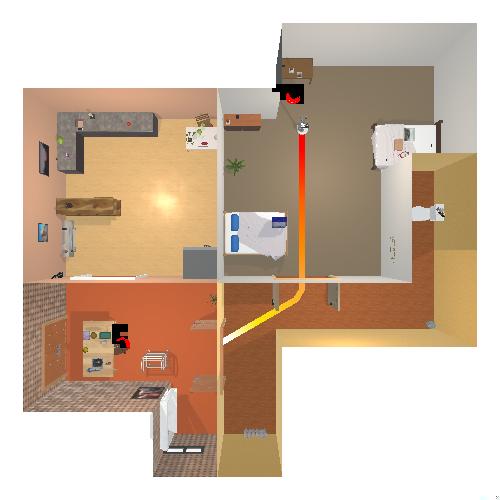

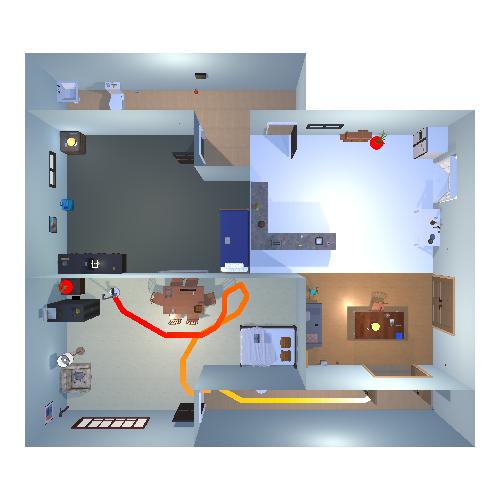

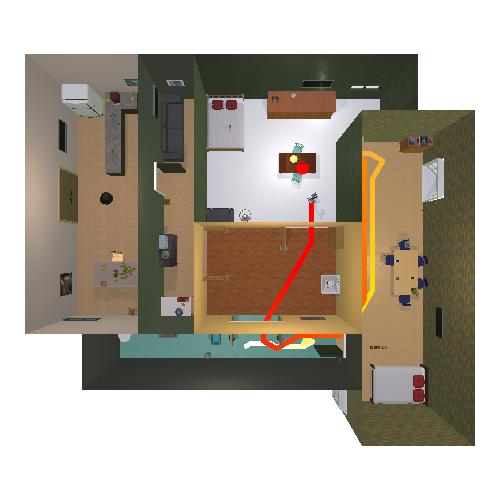

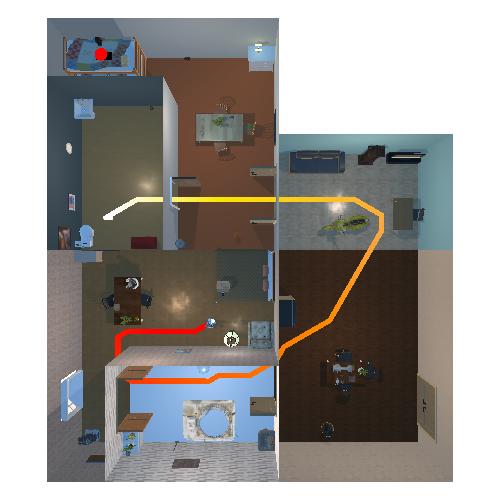

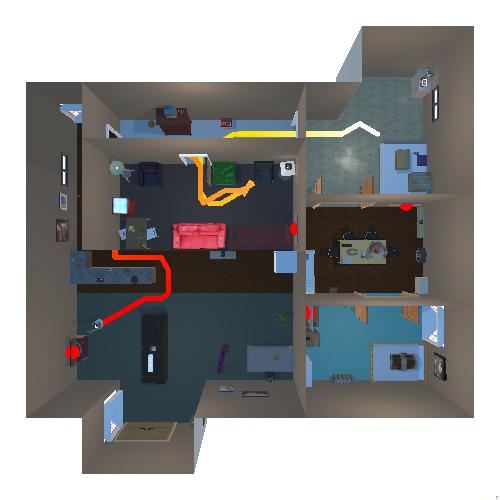

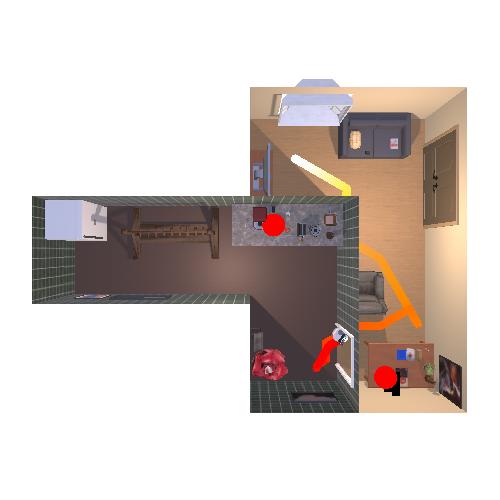

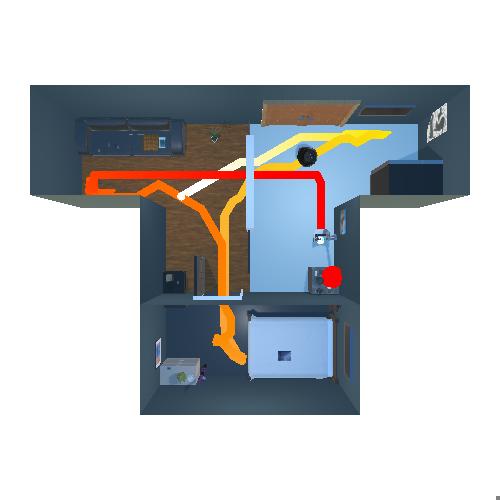

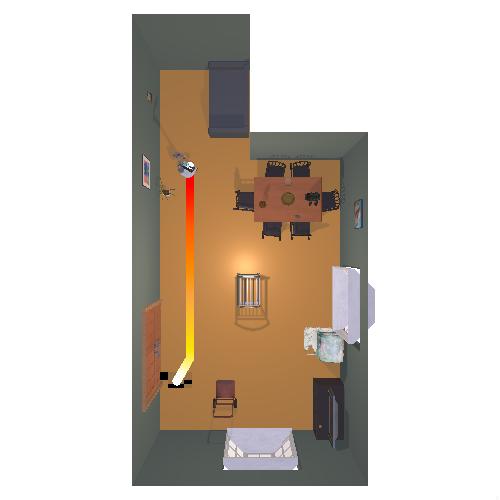

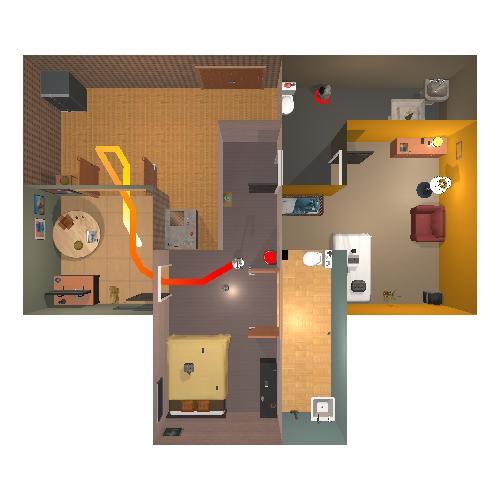

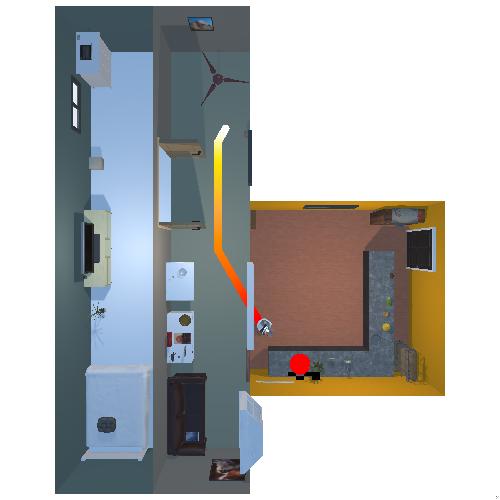

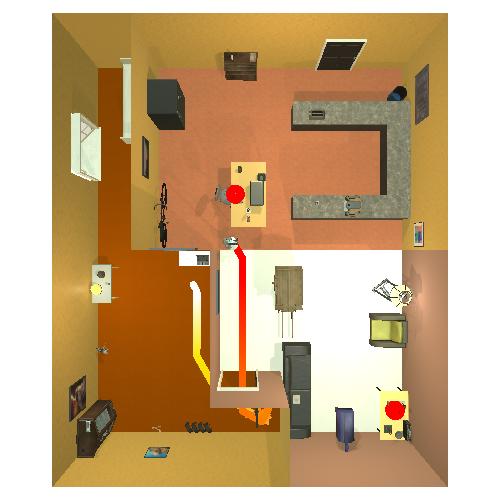

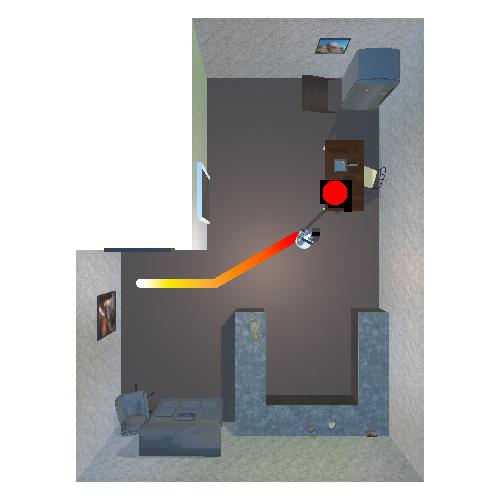

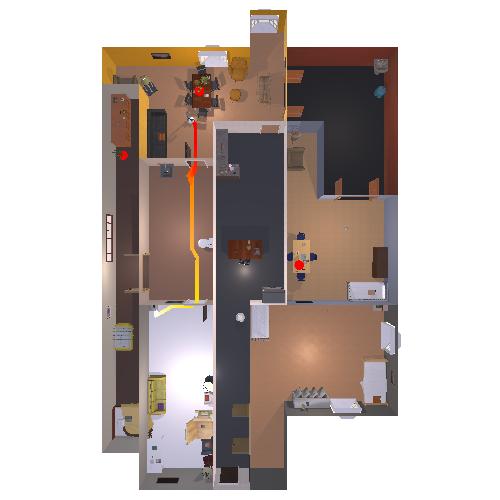

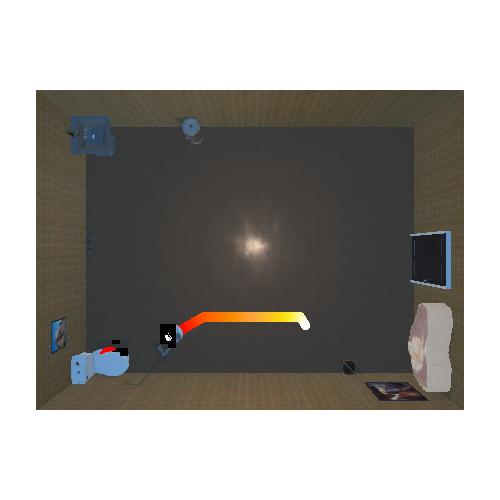

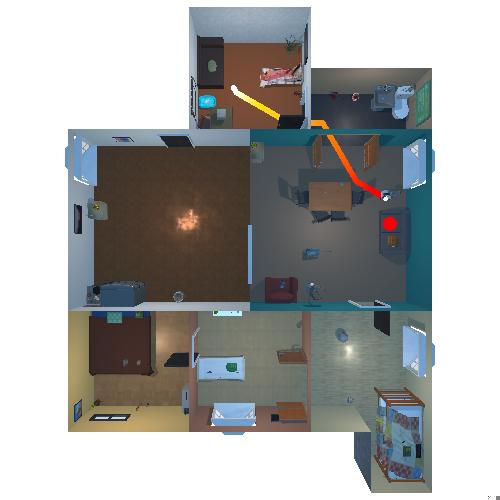

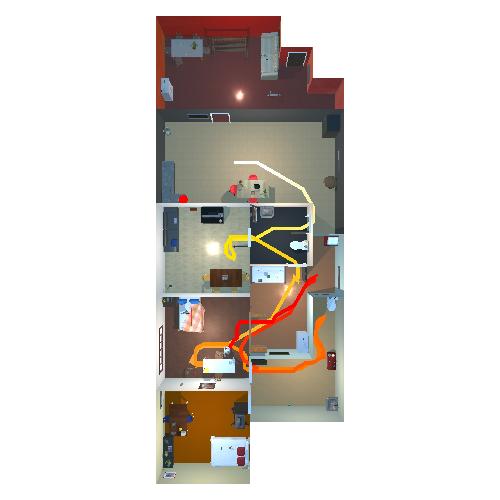

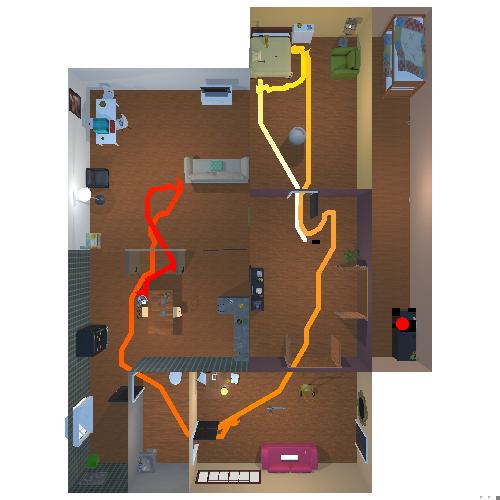

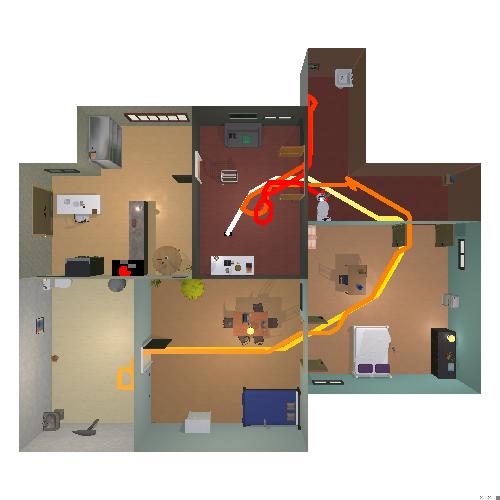

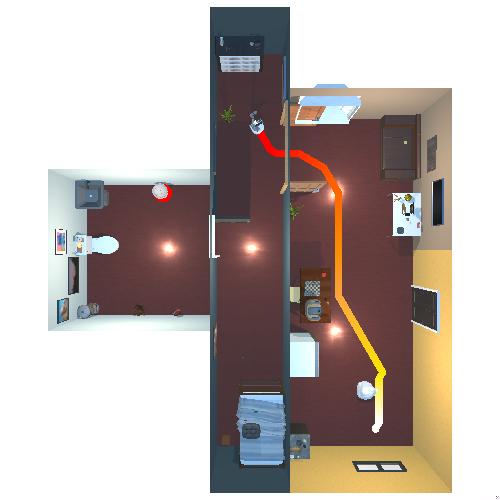

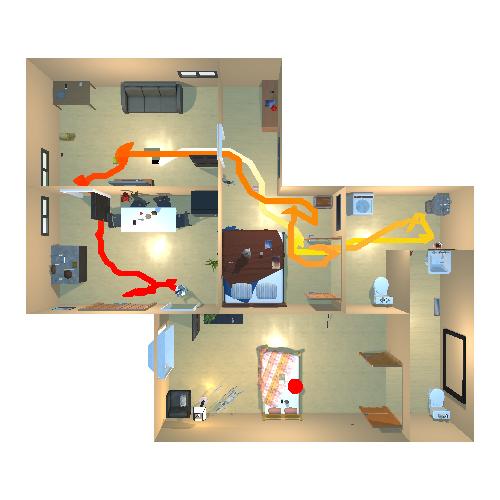

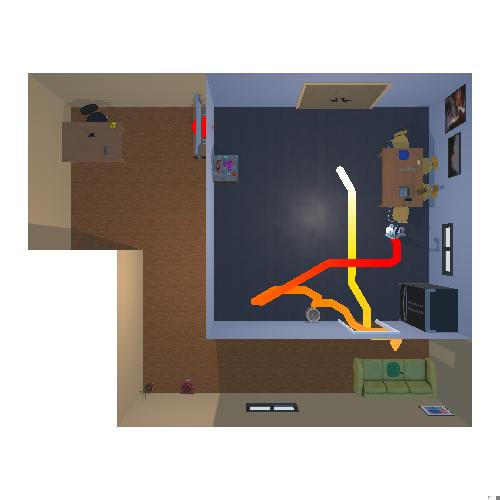

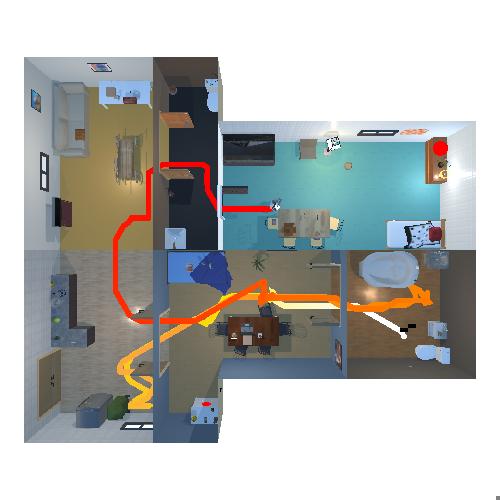

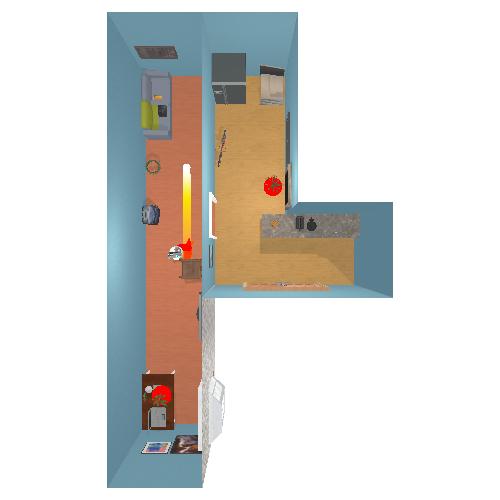

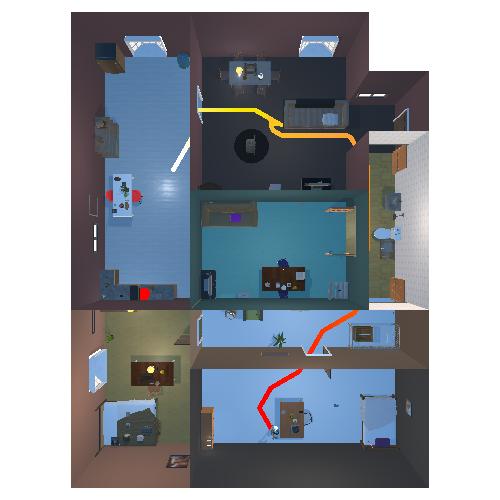

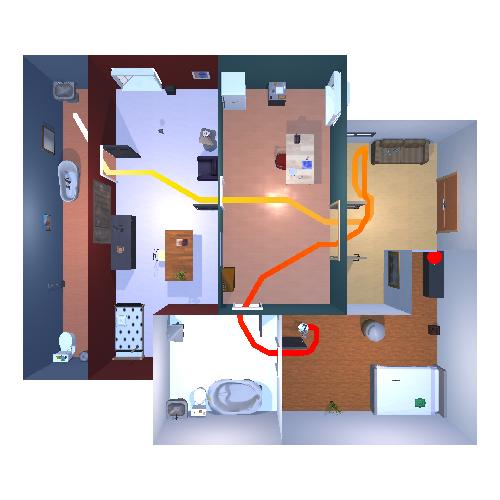

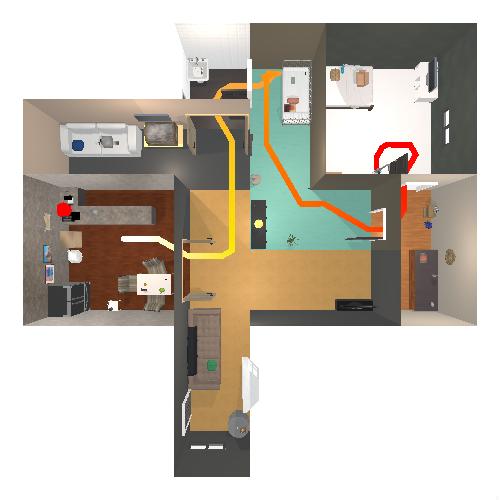

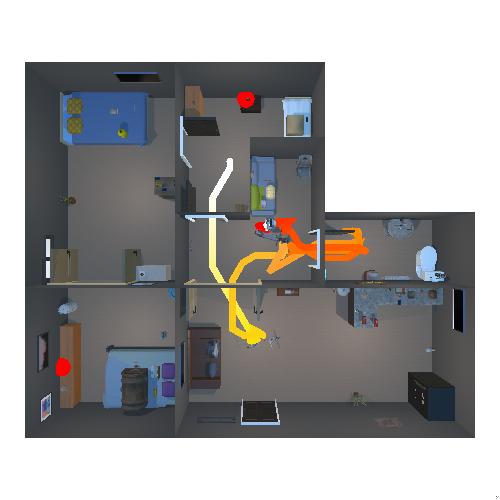

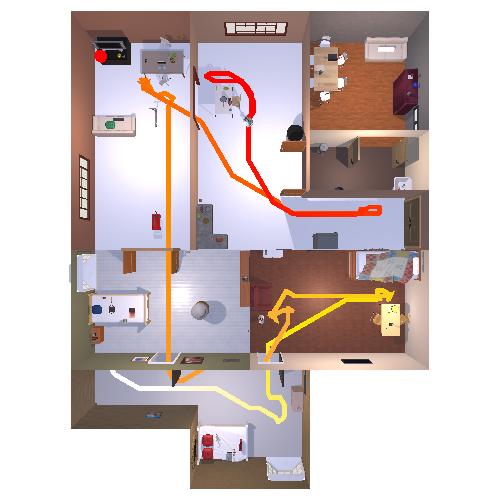

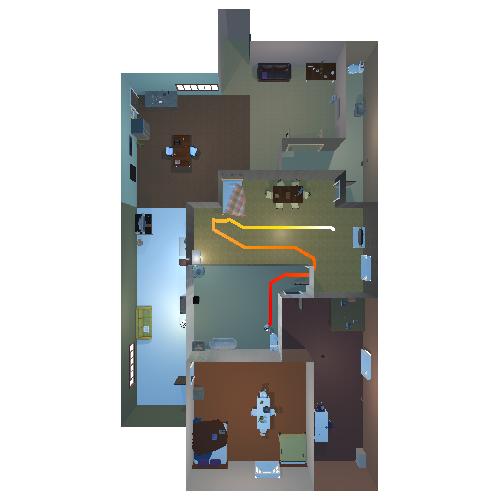

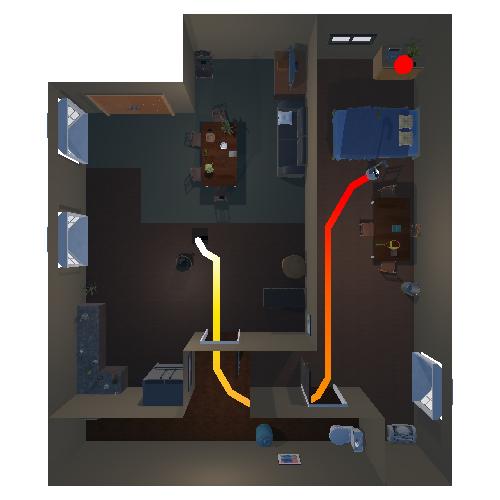

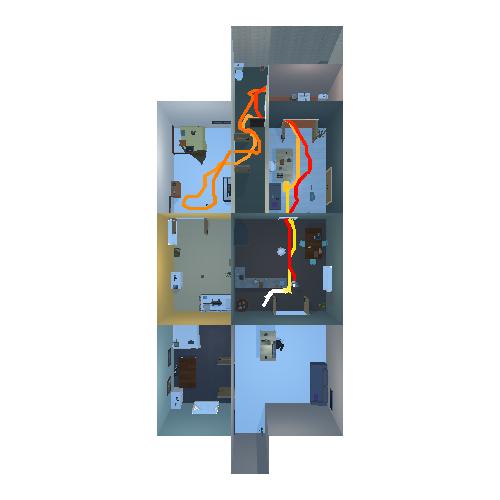

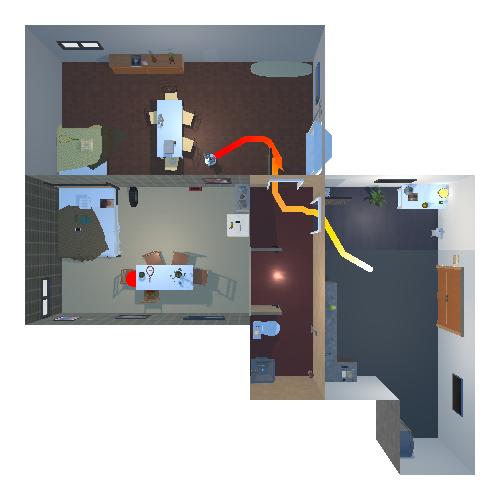

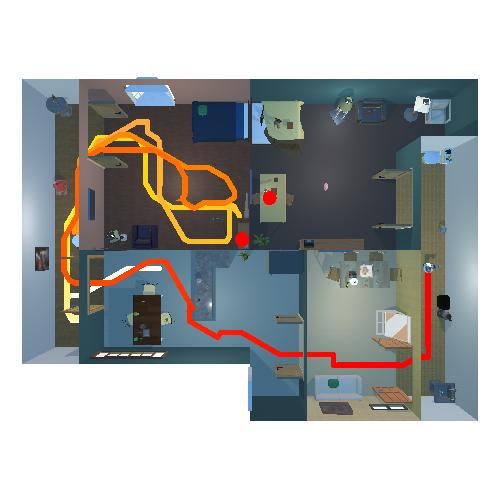

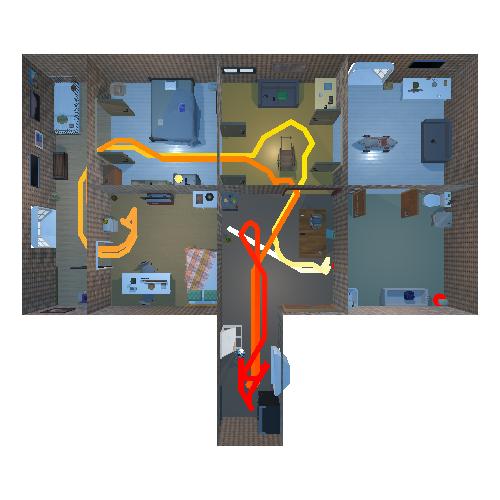

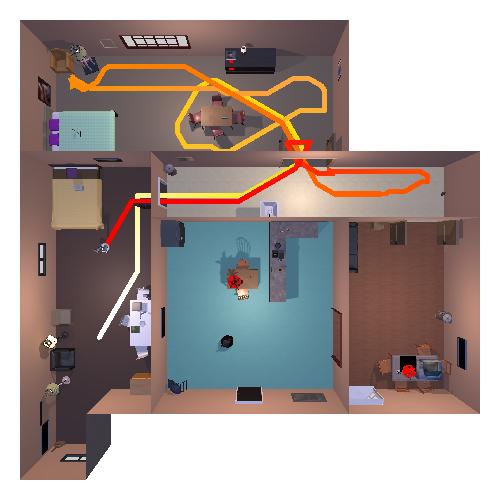

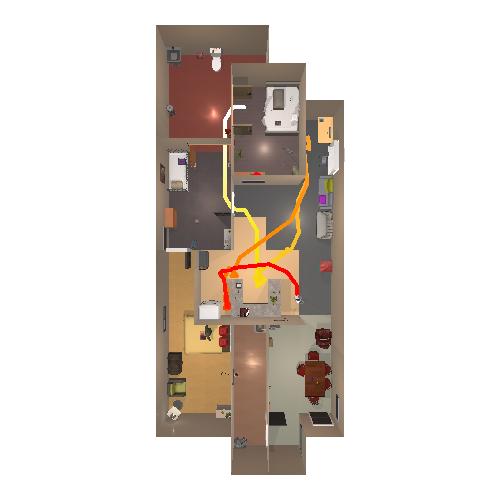

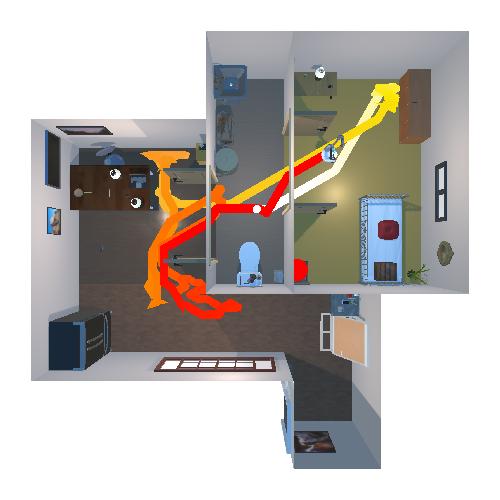

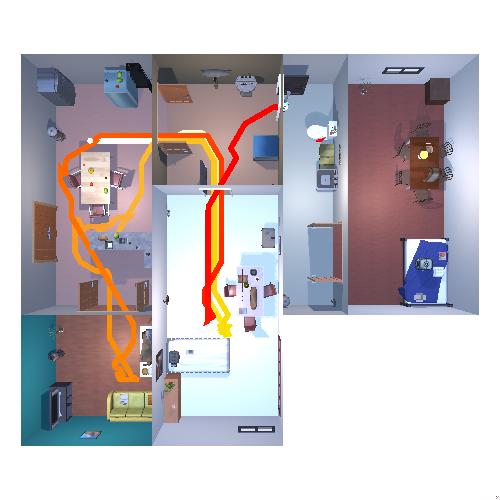

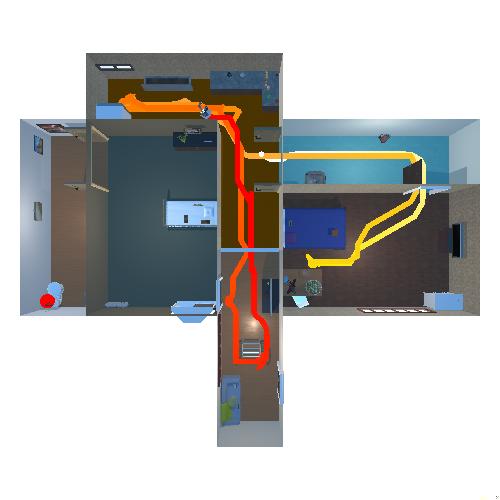

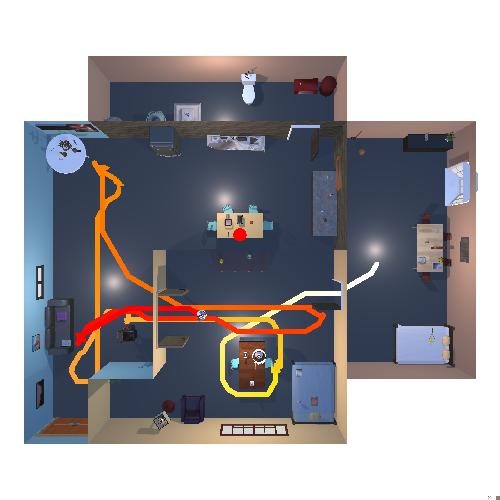

This figure shows top-down visualizations of our agent's object-navigation trajectories on our validation set. Here we list all 200 examples within the validation set using our SPOC model trained with ground-truth detections. The agent's path is visualized as a line that goes from white (episode start) to red (episode end). We also add red markers to indicate the locations of objects of the goal object category.
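
As a rough illustration of the white-to-red path coloring described in the caption, the sketch below assigns one RGB color to each point along a trajectory by linear interpolation. The function name `path_colors`, the linear interpolation scheme, and the RGB value ranges are assumptions made for illustration; the rendering code actually used to produce these figures is not specified here.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def path_colors(
    num_points: int,
    start: RGB = (1.0, 1.0, 1.0),  # white: episode start (assumed RGB in [0, 1])
    end: RGB = (1.0, 0.0, 0.0),    # red: episode end
) -> List[RGB]:
    """Return one color per path point, linearly blended from start to end."""
    if num_points <= 0:
        return []
    if num_points == 1:
        return [start]
    colors: List[RGB] = []
    for i in range(num_points):
        t = i / (num_points - 1)  # 0.0 at the first point, 1.0 at the last
        colors.append(tuple((1 - t) * s + t * e for s, e in zip(start, end)))
    return colors


# Example: a three-point path gets white, a light red, then full red.
print(path_colors(3))
```

Each consecutive pair of path points could then be drawn as a line segment in the corresponding color by any plotting library, so that a trajectory reads from white to red over the course of the episode.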

Target: vase, success

Target: bed, success

Target: atomizer, success

Target: basketball, success

Target: ashcan, success

Target: bed, success

Target: houseplant, success

Target: vase, success

Target: apple, success

Target: atomizer, success

Target: ashcan, success

Target: laptop, success

Target: mug, success

Target: bed, success

Target: television_receiver, success

Target: straight_chair, success

Target: atomizer, success

Target: atomizer, success

Target: ashcan, success

Target: toilet, success

Target: mug, success

Target: bowl, success

Target: ashcan, success

Target: basketball, success

Target: television_receiver, success

Target: laptop, success

Target: straight_chair, success

Target: atomizer, success

Target: vase, success

Target: bed, success

Target: bowl, success

Target: bed, success

Target: straight_chair, success

Target: mug, success

Target: laptop, success

Target: toilet, success

Target: bed, success

Target: houseplant, success

Target: bowl, success

Target: toilet, success

Target: sofa, success

Target: vase, success

Target: laptop, success

Target: television_receiver, success

Target: sofa, success

Target: basketball, success

Target: television_receiver, success

Target: toilet, success

Target: straight_chair, success

Target: basketball, success

Target: bed, success

Target: laptop, success

Target: toilet, success

Target: bowl, success

Target: mug, success

Target: ashcan, success

Target: vase, success

Target: sofa, success

Target: bed, success

Target: atomizer, success

Target: alarm_clock, success

Target: houseplant, success

Target: straight_chair, success

Target: straight_chair, success

Target: toilet, success

Target: ashcan, success

Target: laptop, success

Target: atomizer, success

Target: houseplant, success

Target: laptop, success

Target: houseplant, success

Target: bed, success

Target: houseplant, success

Target: apple, success

Target: straight_chair, success

Target: bowl, success

Target: bed, success

Target: atomizer, success

Target: houseplant, success

Target: straight_chair, success

Target: television_receiver, success

Target: sofa, success

Target: bowl, success

Target: houseplant, success

Target: alarm_clock, success

Target: atomizer, success

Target: sofa, success

Target: television_receiver, success

Target: atomizer, success

Target: atomizer, success

Target: vase, success

Target: alarm_clock, success

Target: mug, success

Target: sofa, success

Target: ashcan, success

Target: television_receiver, success

Target: sofa, success

Target: television_receiver, success

Target: toilet, success

Target: television_receiver, success

Target: toilet, success

Target: sofa, success

Target: apple, success

Target: toilet, success

Target: apple, success

Target: alarm_clock, success

Target: straight_chair, success

Target: laptop, success

Target: houseplant, success

Target: mug, success

Target: bowl, success

Target: apple, success

Target: ashcan, success

Target: apple, success

Target: atomizer, success

Target: television_receiver, success

Target: vase, success

Target: straight_chair, success

Target: toilet, success

Target: basketball, success

Target: houseplant, success

Target: apple, success

Target: television_receiver, success

Target: atomizer, success

Target: bowl, success

Target: toilet, success

Target: straight_chair, success

Target: sofa, success

Target: laptop, success

Target: straight_chair, success

Target: straight_chair, success

Target: bed, success

Target: toilet, success

Target: houseplant, success

Target: bed, success

Target: laptop, success

Target: laptop, success

Target: bed, success

Target: apple, success

Target: bowl, success

Target: television_receiver, success

Target: bowl, success

Target: mug, success

Target: mug, success

Target: sofa, success

Target: sofa, success

Target: ashcan, success

Target: ashcan, success

Target: mug, success

Target: vase, success

Target: alarm_clock, success

Target: bowl, success

Target: sofa, success

Target: houseplant, success

Target: toilet, success

Target: laptop, success

Target: apple, failure

Target: alarm_clock, failure

Target: apple, failure

Target: bowl, failure

Target: ashcan, failure

Target: basketball, failure

Target: television_receiver, failure

Target: ashcan, failure

Target: vase, failure

Target: mug, failure

Target: bed, failure

Target: alarm_clock, failure

Target: houseplant, failure

Target: apple, failure

Target: mug, failure

Target: laptop, failure

Target: basketball, failure

Target: vase, failure

Target: alarm_clock, failure

Target: mug, failure

Target: sofa, failure

Target: ashcan, failure

Target: alarm_clock, failure

Target: bowl, failure

Target: alarm_clock, failure

Target: sofa, failure

Target: basketball, failure

Target: alarm_clock, failure

Target: vase, failure

Target: mug, failure

Target: basketball, failure

Target: vase, failure

Target: ashcan, failure

Target: houseplant, failure

Target: alarm_clock, failure

Target: alarm_clock, failure

Target: alarm_clock, failure

Target: toilet, failure

Target: atomizer, failure

Target: straight_chair, failure

Target: vase, failure

Target: apple, failure

Target: apple, failure